Misusing Microsoft Defender For Domain Blocking Bypass Shenanigans

I've mentioned previously that in the mornings I tend to wake up by looking at my PFAnalytics dashboard whilst letting the coffee soak in.

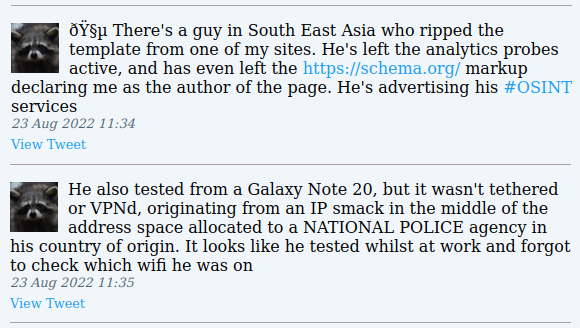

That habit occasionally leads me to noticing something odd and getting curiosity-sniped into going on fun adventures, ranging from the extreme to the utterly baffling:

This post was driven by something (far) less OSINT'y and falls not nearly so far along the WTF spectrum, but still fairly interesting.

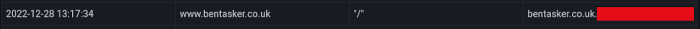

In late December, I sat down (with my coffee), opened the dashboard and saw that a request had been logged with a strange Referer:

The referring domain begins with my domain name, but has additional labels appended (i.e. bentasker.co.uk.example.com rather than bentasker.co.uk). The redacted portion of the name does not contain a domain that's immediately recognisable, although it does use a TLD which makes you think of Microsoft.

For avoidance of doubt, the issue that I'm going to describe has been reported to Microsoft (hence the time difference between seeing it and writing this post) and they've now assessed that it does not require immediate fixes (though the team responsible for the product may choose to fix it later) and said that it's OK to write about it.

Although the affected domain is relatively easy to find (in fact, I've found a couple), the impact isn't really on Microsoft but on others, so out of an abundance of caution, I'm not going to publish the actual domain name(s) and will instead refer to example.com.

The Source of The Data

For those who aren't familiar with HTTP, Referer is a request header indicating where the user agent is coming from (e.g. which page they followed a link on to reach the current one). Quite simply, where were they referred from?

The header, as you may have noticed, was misspelt in the original RFC.

On the wire, a HTTP/1.1 request might look something like the following:

GET /page-2208131741-Constructing-a-hyperlink-to-share-content-onto-Reddit-Misc.html HTTP/1.1

Host: snippets.bentasker.co.uk

Referer: https://www.bentasker.co.uk/posts/blog/general/using-kodi-as-a-dlna-source-for-a-roku-stick.html

User-Agent: Ben-Typing

(In practice there will usually be more headers).

The header is optional: user-agents can opt not to send it, and sites can also instruct them not to send it through use of Referrer-Policy headers (note that Referrer is spelt correctly in those headers, which totally never leads to mistakes).

Referer information can be useful/interesting, but also has the potential to be a privacy nightmare (depending on where exactly the user has been referred from).

All that really matters for the purposes of this post, though, is that referrer information was available and showed a weird domain.

What Analytics Shows

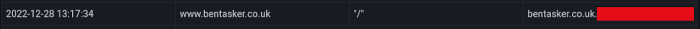

Going back to that analytics screenshot

It shows the following:

- There was a request for https://www.bentasker.co.uk/ at 13:17:34 on 28 Dec 2022

- That request was referred from bentasker.co.uk.example.com (PFAnalytics only stores the referring domain, not the path)

I don't normally pay much attention to referral information: although it's sometimes interesting to see where traffic's coming from, I'm not usually too fussed.

When a referring domain contains my domain name though, alarm bells start to ring.

Normally, those show up as a result of someone trying to hijack content - serving my content from their own domain, with ads attached. It seems to be less common on the WWW now, but still sometimes happens with my eepsite and onion addresses (and of course, there's Tor2Web, but that's a whole other issue).
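As a rough illustration of that check (this is a sketch, not how PFAnalytics actually does it), the snippet below reduces a Referer value to its domain and flags domains which embed a site's name but carry extra trailing labels. The function names are hypothetical.

```python
from urllib.parse import urlsplit


def referring_domain(referer_header: str) -> str:
    """Reduce a Referer header value to just its (lowercased) hostname."""
    return (urlsplit(referer_header).hostname or "").lower()


def embeds_own_domain(ref_domain: str, own_domain: str) -> bool:
    """True if ref_domain contains own_domain followed by extra labels.

    e.g. bentasker.co.uk.example.com embeds bentasker.co.uk
    """
    ref = ref_domain.lower().rstrip(".")
    own = own_domain.lower().rstrip(".")
    # Referrals from the site itself (or its subdomains) end with our
    # domain, so they aren't the odd case we're looking for.
    if ref == own or ref.endswith("." + own):
        return False
    # Match our domain only as a run of whole labels with more labels after it.
    return ref.startswith(own + ".") or ("." + own + ".") in ref
```

Matching on whole labels means that something like notbentasker.co.uk.example.com isn't flagged, whilst www.bentasker.co.uk.example.com is.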

Checking Who Owns It

When a suspicious domain appears, it can be quite tempting to just visit it.

But, it's important not to rush these things: the data we have originates from a request generated and placed by persons unknown. It's extremely unlikely, but possible that the request has been placed specifically to get me to visit the domain (whether for an attempted drive-by download or as the first step in some other plan).

So, I started by using whois to see who owns the domain:

Registrar: MarkMonitor

...

Registry Registrant ID: qJ2Uw-9Hf9z

Registrant Name: Domain Administrator

Registrant Organization: Microsoft Corporation

Registrant Street: One Microsoft Way

Registrant City: Redmond

Registrant State/Province: WA

Registrant Postal Code: 98052

The record is quite long, but it's Microsoft all the way down, which prompted a bit of a "duhhhh" moment: the domain falls under the TLD .ms.

Fun fact: I initially assumed that .ms was a gTLD that Microsoft had acquired, but it's actually the country code TLD for Montserrat and Microsoft just like to use it (for obvious reasons).

In some ways, the ownership makes things weirder: what are MS doing with a subdomain named after my site?

What It Does

The next thing to do, then, was to find out what the site actually does. There was still a remote possibility that this was a malicious attempt (by someone who'd managed to pop something at Microsoft), so I tested by running a curl from a safe place.

curl -v https://bentasker.co.uk.example.com/

This resulted in a HTTP 200 and served up a small HTML response body

<html>

<head>

<title>Continue</title>

<script type="text/javascript">

function OnBack() {}

function DoSubmit() {

var subt = false;

if (!subt) {

subt = true;

document.hiddenform.submit();

}

}

</script>

<link rel="icon" type="image/png" href="data:image/png;base64,iVBORw0KGgo=" />

</head>

<body><form name="hiddenform" id="hiddenform" action="https://bentasker.co.uk/" enctype="application/x-www-form-urlencoded" method="GET">

<noscript>

<input type="submit" value="Click here to continue" />

</noscript>

</form>

<script src="<redacted>"></script>

<script type="text/javascript">

var redirectForm = document.hiddenform;

redirectForm.setAttribute("action", redirectForm.getAttribute("action") + window.location.hash);

SessionContextStoreHelper.storeContextBeforeAction("<redacted>#action=store&contextData=https%3A%2F%2Fbentasker.co.uk%2F", DoSubmit, true);

</script>

(Interestingly, the response headers indicate that the server in use is OpenResty)

The page runs javascript which writes some context information to the browser's Session Storage and then redirects the user onto the target domain (in this case https://bentasker.co.uk) by submitting the hidden form.

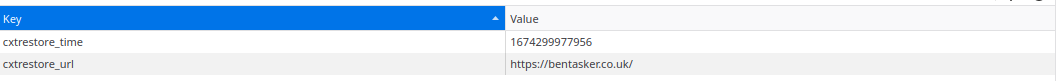

Relatively happy that the page shouldn't serve any nasties, I visited the domain in a browser and did a little poking to see what storeContextBeforeAction() was actually storing:

Nothing overly thrilling there, and it certainly doesn't answer the new question: What is the point in all this?

I started to experiment a bit to see how the redirected target can be manipulated.

If we add a filename to our request (i.e. https://bentasker.co.uk.example.com/robots.txt), then it'll be included in the resulting redirect

<form name="hiddenform" id="hiddenform" action="https://bentasker.co.uk/robots.txt" enctype="application/x-www-form-urlencoded" method="GET">

It's not just my domain, or the .co.uk TLD either, we can even have it redirect us to Google search results:

curl "https://www.google.com.example.com/search?q=does+this+search"
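The mapping from requested URL to redirect destination appears to be purely mechanical. The sketch below models it based only on the behaviour observed above: the static suffix is stripped from the hostname and the path, query string and fragment are carried over. The function name is hypothetical and example.com is the same placeholder used throughout this post.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

# Placeholder for the real (unpublished) domain.
DCA_SUFFIX = ".example.com"


def redirect_target(url: str, suffix: str = DCA_SUFFIX) -> Optional[str]:
    """Return where the redirect page would send a browser, or None if
    the URL's host doesn't sit under the suffix."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    if not host.endswith(suffix):
        return None
    # Everything before the suffix is treated as the real destination host.
    original_host = host[: -len(suffix)]
    return urlunsplit(
        (parts.scheme, original_host, parts.path or "/", parts.query, parts.fragment)
    )
```

For example, redirect_target("https://bentasker.co.uk.example.com/robots.txt") gives https://bentasker.co.uk/robots.txt.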

I also wondered whether it was possible to include HTML in the URL path in order to inject it into the response body:

openssl s_client -connect bentasker.co.uk.example.com:443 -servername bentasker.co.uk.example.com

GET /foo"><a name="foo">a</a> HTTP/1.1

Host: bentasker.co.uk.example.com

User-Agent: me

But, it's correctly escaped

<form name="hiddenform" id="hiddenform" action="https://bentasker.co.uk/foo%22%3E%3Ca%20name=%22foo%22%3Ea%3C/a%3E" enctype="application/x-www-form-urlencoded" method="GET">

The same is true if you instead try to inject a quote into the Host header.
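The escaped form action above is consistent with the path simply being percent-encoded before it's written into the page. The following is a minimal sketch which reproduces that output, on the assumption (inferred from this single response, not from any knowledge of the server's code) that / and = are left as-is.

```python
from urllib.parse import quote


def escape_path(raw_path: str) -> str:
    """Percent-encode a request path before embedding it in HTML.

    "/" and "=" are kept literal to match the observed output; quotes,
    angle brackets and spaces are all encoded, so the value can't break
    out of the action="..." attribute.
    """
    return quote(raw_path, safe="/=")


print(escape_path('/foo"><a name="foo">a</a>'))
# /foo%22%3E%3Ca%20name=%22foo%22%3Ea%3C/a%3E
```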

What It's Used For

This was all very interesting, but why is it there, and what is it used for?

A bit of searching led me to some Microsoft documentation mentioning the static part of the domain (so example.com in the examples above), which revealed that the domain is used for Microsoft's Defender for Cloud Apps (DCA).

The behaviour that I've shown above, it seems, is not the behaviour that a DCA user would experience.

Instead (depending on the mode used by their organisation), the service acts as a Cloud Access Security Broker (CASB) and as a reverse proxy to serve whatever is being requested, rewriting cookies etc. as necessary.

In Microsoft's words

It provides rich visibility, control over data travel, and sophisticated analytics to identify and combat cyberthreats across all your Microsoft and third-party cloud services.

Which undoubtedly ticks a few boxes for more than a few people.

Misusing It

The aim of this post, though, isn't to sell someone else's security products, it's to misbehave.

Now that we know what we're looking at, it's time to think about how it can be put to unintended uses.

Because I'm not a DCA user, I don't get the reverse proxy experience (which might have its own problems - I'd quite like to play around with that too at some point), instead my browser is redirected to the original domain.

That redirect behaviour, though, is problematic: the redirect destination is entirely under the control of whoever writes the URL and as such acts as an Open Redirect.

However, the model here is slightly different to that of a traditional open redirect.

Normally when we talk about open redirects we think about links to known/trusted sites resulting in a redirect to an unknown one. For example, https://www.bank.example?u=evilsite.example redirecting to evilsite.example: Users see the link to bank.example and click it, but are quietly redirected to evilsite.example (which might be designed to look exactly the same in order to harvest credentials).

That's not the case for DCA: a user hitting foo.example.com won't recognise the end of the domain name and, even if they don't read that far, will still end up on the domain that they expected to visit, so there's limited opportunity to use it to fool users.

So, how can we abuse this?

Managing Social Groups

Jumping topic for a moment, I'd like to talk a little about ensuring safety on social networks, forums and (more or less) anything hosting User Generated Content (UGC).

Anyone even vaguely familiar with moderation in these venues will be aware that things sometimes spread very quickly, with good and bad going viral in roughly equal proportion. This virality (and the propensity of bad actors to persist in re-posting removed content), unfortunately, applies to malicious content and links too.

Moderators of busy platforms can't keep pace with the rate of posts, so on their own, wouldn't stand a chance of continuously identifying where links to https://evilsite.example/credit-card are being reposted.

This issue is one of the reasons that UGC hosting services often use outlinks: you've probably seen implementations of this by Twitter (t.co), forum software and perhaps Reddit (out.reddit.com). Although services undoubtedly also use these for analytics purposes, they help satisfy important safety requirements too.

URLs posted on the platform are transparently replaced with links to the platform's outlink service, which then redirects them on to the destination (crucially, they're not an open redirect: the outlinks can't simply be hand edited to change to a destination of choice).

This allows platforms to easily

- Blocklist known malicious links, preventing users from being redirected on at all

- Introduce an interstitial to warn users of potentially dodgy links

- (potentially) see which users visited the link (and so identify those who may need further account security support)

With these systems in place, moderation teams no longer need to catch every posting of https://evilsite.example/credit-card: the platform will replace it with a link to the outlink service and so they just need to disable exiting via that outlink.

Bigger platforms, like Twitter and Facebook, go even further: when a link is posted, the domain is also checked against lists of known-bad domains and if necessary a block or an interstitial is automatically added. So, a moderator doesn't necessarily need to have manually reviewed/blocklisted a domain in order for users to be protected from malicious links, in fact, they don't even need to know that it's currently being posted.
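To make the mechanics concrete, here's a toy model of an outlink service. It's a sketch only: the class, method names and return values are all hypothetical and don't reflect how any particular platform implements theirs.

```python
import secrets
from urllib.parse import urlsplit


class OutlinkService:
    """Toy outlink service: posted URLs are swapped for opaque tokens."""

    def __init__(self, blocked_domains=(), warn_domains=()):
        self._links = {}  # token -> original URL
        self.blocked = {d.lower() for d in blocked_domains}
        self.warn = {d.lower() for d in warn_domains}

    def wrap(self, url: str) -> str:
        """Store a posted URL and return the token used in the outlink."""
        token = secrets.token_urlsafe(6)
        self._links[token] = url
        return token

    def resolve(self, token: str):
        """Decide what happens when a user clicks the outlink."""
        url = self._links.get(token)
        if url is None:
            # Tokens can't be hand edited into arbitrary destinations,
            # so this isn't an open redirect.
            return ("not_found", None)
        host = (urlsplit(url).hostname or "").lower()
        if host in self.blocked:
            return ("blocked", None)
        if host in self.warn:
            return ("interstitial", url)
        return ("redirect", url)
```

Because the decision is made at click time rather than at posting time, adding a domain to the blocked set immediately neutralises every copy of the link that has already been posted.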

Bypassing Automated Checks with Cloud Defender

The core problem with DCA providing an Open Redirect is that it can be used to circumvent some of the safeguards that outlink services provide.

If ImYourBankHonest.evil.example is on a list of known dangerous sites, then sharing it on most social media platforms won't work and users will instead see a warning that the domain is unsafe.

However, the domain ImYourBankHonest.evil.example.example.com can be shared, because it won't be on the safety lists.

Realistically, that malicious domain probably won't be blocked until a moderator spots a report and takes action. Depending on the intensity of the campaign that might be many, many clicks later.
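The bypass can be shown with a very small blocklist check. This is a sketch under assumptions: the blocklist contents, suffix list and function names are illustrative, and unwrap() is only one possible countermeasure (it requires the platform to already know the wrapper's suffix).

```python
# Hypothetical known-bad list and wrapper suffix (example.com is the
# placeholder used throughout this post).
BLOCKLIST = {"imyourbankhonest.evil.example"}
WRAPPER_SUFFIXES = (".example.com",)


def is_blocked(host: str, blocklist=BLOCKLIST) -> bool:
    """Block a listed domain and any of its subdomains."""
    host = host.lower().rstrip(".")
    return any(host == bad or host.endswith("." + bad) for bad in blocklist)


def unwrap(host: str, suffixes=WRAPPER_SUFFIXES) -> str:
    """Strip a known wrapper suffix to recover the embedded domain."""
    host = host.lower().rstrip(".")
    for suffix in suffixes:
        if host.endswith(suffix) and len(host) > len(suffix):
            return host[: -len(suffix)]
    return host


wrapped = "ImYourBankHonest.evil.example.example.com"
print(is_blocked("ImYourBankHonest.evil.example"))  # True
print(is_blocked(wrapped))                          # False
print(is_blocked(unwrap(wrapped)))                  # True
```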

Redirection Methods

Now, you might be tempted to think that this isn't all that different to URL shortener services such as Bit.ly, but, there's a fairly critical difference in the way that those services operate.

If we make a request for a bit.ly shortened URL, the result is a HTTP redirect:

$ curl -v https://bit.ly/2XT8wqD

> GET /2XT8wqD HTTP/2

> Host: bit.ly

> user-agent: curl/7.81.0

> accept: */*

>

< HTTP/2 301

< server: nginx

< date: Sat, 21 Jan 2023 12:11:19 GMT

< content-type: text/html; charset=utf-8

< content-length: 115

< cache-control: private, max-age=90

< location: https://www.bentasker.co.uk/

The status code indicates that we're being redirected and the location header tells us where to go.

If you shorten one of your own URLs and post it to social media, you will often see the social media platform's bot appear in your access logs, because the platform follows links and redirects in order to see where they go (as well as to do things like check for OpenGraph markup etc).

So, even if I try to obfuscate by posting https://bit.ly/2XT8wqD, the social media platform can see that the eventual destination domain is www.bentasker.co.uk and check that against its known bad list.

The problem is, Microsoft's DCA doesn't use HTTP redirects.

Instead it redirects using javascript, so outlink checkers will receive a HTTP 200 and some HTML, potentially never knowing that a user will be redirected and never checking the true destination against the list of known bad domains. Checkers could use something like headless chrome to check for this, but (AFAIK) none of the big platforms currently do (I'd expect to see them in my analytics if they did).

Some UGC services might also perform pattern matching in the hope of catching evilsite.example even if it's embedded into another domain name. However, this can be thwarted by using a link shortener to obfuscate the destination and then using the DCA domain to refer onwards to that: bit.ly.example.com/2XT8wqD works perfectly well.
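Short of running a headless browser, a checker could try to spot this specific pattern statically. The sketch below follows HTTP redirects as normal and, on a 200 response, looks for a form whose submission is triggered by script. It's a heuristic that will miss other javascript redirect styles and can produce false positives; fetch is a hypothetical callable returning (status, headers, body), with lowercase header names, so that the logic can be exercised without touching the network.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class _FormFinder(HTMLParser):
    """Record the first <form> action and whether any script calls .submit()."""

    def __init__(self):
        super().__init__()
        self.form_action = None
        self.in_script = False
        self.script_submits = False

    def handle_starttag(self, tag, attrs):
        if tag == "form" and self.form_action is None:
            self.form_action = dict(attrs).get("action")
        elif tag == "script":
            self.in_script = True

    def handle_endtag(self, tag):
        if tag == "script":
            self.in_script = False

    def handle_data(self, data):
        if self.in_script and ".submit()" in data:
            self.script_submits = True


def js_form_redirect(html, base_url):
    """Return the form action if the page looks like an auto-submitting form."""
    finder = _FormFinder()
    finder.feed(html)
    finder.close()
    if finder.form_action and finder.script_submits:
        return urljoin(base_url, finder.form_action)
    return None


def final_destination(url, fetch, max_hops=5):
    """Follow HTTP redirects and auto-submitting forms to the last URL reached."""
    for _ in range(max_hops):
        status, headers, body = fetch(url)
        if status in (301, 302, 303, 307, 308) and "location" in headers:
            url = urljoin(url, headers["location"])
            continue
        target = js_form_redirect(body, url) if status == 200 else None
        if target is None or target == url:
            return url
        url = target
    return url
```

With something like this in place, the bit.ly.example.com trick resolves through both hops, so the final domain can still be checked against the known bad list.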

Summary

Microsoft's Defender for Cloud Apps uses a publicly accessible server which, by default, provides an Open Redirect (which is a little awkward, given this).

Whilst the traditional Open Redirect threat-model doesn't apply to the service, it can be mis-used in order to facilitate posting of malicious links on other sites and platforms.

The way that the redirect is implemented makes it difficult - even for platforms with "smart" outlink capabilities - to identify that a redirect will occur, and therefore to identify the true destination. Although pattern matching can be used against the domain, it's possible to circumvent this by using the Open Redirect to first reach a link shortener.

This means that the DCA domain(s) can be used to hamper the efforts of outlink services which check links against lists of known bad domains, effectively circumventing the protections that they offer.

SSL Certs

Going on a quick tangent, you may have noticed that my earlier requests used https:// and yet weren't met with a certificate warning: This is because DCA presents certificates signed by a publicly trusted certificate authority (the Azure CAs: Microsoft Azure TLS Issuing CA).

In the bad old days of on-site HTTPS MiTM, you'd often have something like Squid dynamically generate certificates to match the destination domain, signing them with its own CA (which needed to be installed and trusted on the client devices). That approach requires that the signing key be constantly available, so it would be absolute nightmare fuel if Microsoft were using the same technique with a publicly trusted CA.

If we pull the certificates, though, we can see that they're not generated dynamically and are in fact wildcard certificates issued against various TLD variations:

$ echo | openssl s_client -connect bentasker.co.uk.example.com:443 -servername bentasker.co.uk.example.com 2>&1 | openssl x509 -noout -text

Validity

Not Before: Jun 17 17:55:29 2022 GMT

Not After : Jun 12 17:55:29 2023 GMT

Subject: C = US, ST = WA, L = Redmond, O = Microsoft Corporation, CN = *.co.uk.example.com

$ echo | openssl s_client -connect bentasker.co.uk.example.com:443 -servername bentasker.ly.example.com 2>&1 | openssl x509 -noout -text

Validity

Not Before: Jun 18 21:58:07 2022 GMT

Not After : Jun 13 21:58:07 2023 GMT

Subject: C = US, ST = WA, L = Redmond, O = Microsoft Corporation, CN = *.ly.example.com

This means that the service doesn't need to have the private key of a widely trusted CA constantly available to it (phew), but does mean that there will be domains which DCA simply can't cater to.

Some can't be served because a certificate hasn't been issued to match their TLD, but there's another failure case, because RFC 2818 says that a wildcard in an SSL certificate can only match one label:

Names may contain the wildcard character * which is considered to match any single

domain name component or component fragment. E.g., *.a.com matches foo.a.com but

not bar.foo.a.com. f*.com matches foo.com but not bar.com.

What this means is that a certificate for *.co.uk.example.com is valid for bentasker.co.uk.example.com but not for www.bentasker.co.uk.example.com.
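The single-label rule is easy to express in code. This is a simplified sketch rather than a full hostname verifier: it only handles a whole-label wildcard in the left-most position and deliberately ignores fragment wildcards such as f*.com. The function name is hypothetical.

```python
def wildcard_matches(pattern: str, hostname: str) -> bool:
    """Check a hostname against a certificate name such as *.co.uk.example.com."""
    p_labels = pattern.lower().rstrip(".").split(".")
    h_labels = hostname.lower().rstrip(".").split(".")
    # A wildcard stands in for exactly one label, so the label counts must agree.
    if len(p_labels) != len(h_labels):
        return False
    if p_labels[0] == "*":
        # The wildcard label must be non-empty; all other labels must match exactly.
        return bool(h_labels[0]) and p_labels[1:] == h_labels[1:]
    return p_labels == h_labels


print(wildcard_matches("*.co.uk.example.com", "bentasker.co.uk.example.com"))      # True
print(wildcard_matches("*.co.uk.example.com", "www.bentasker.co.uk.example.com"))  # False
```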

If we make a connection using www.bentasker.co.uk.example.com, we see that rather than presenting an invalid certificate, the service returns no certificate at all:

$ echo | openssl s_client -connect bentasker.co.uk.example.com:443 -servername www.bentasker.co.uk.example.com 2>&1 | openssl x509 -noout -text

Could not read certificate from <stdin>

4057D2D5257F0000:error:1608010C:STORE routines:ossl_store_handle_load_result:unsupported:../crypto/store/store_result.c:151:

Unable to load certificate

This feels like it might present a bit of an obvious gap in DCA's coverage: so obvious, in fact, that the system simply must have a way to cater to those needs.

The three ways I can think of are

- Send via HTTP, avoiding the need for certificates (but with severe implications)

- Rewrite the URL to include a service specific query-string var to specify the subdomain

- Issue special certificates for certain domains

The second definitely seems more likely than the first, but the third is also an interesting possibility.

Microsoft's DCA documentation clearly lists Salesforce as being supported. Salesforce serves each of its customers from dedicated subdomains, so we know that that traffic could not be served with the certificates that we've seen so far.

Is there perhaps a special Salesforce specific certificate in Microsoft's collection?

$ echo | openssl s_client -connect test.lightning.force.com.example.com:443 -servername test.lightning.force.com.example.com 2>&1 | openssl x509 -noout -text

Validity

Not Before: Jul 13 22:07:50 2022 GMT

Not After : Jul 8 22:07:50 2023 GMT

Subject: C = US, ST = WA, L = Redmond, O = Microsoft Corporation, CN = *.lightning.force.com.example.com

Well... would you look at that...

A quick search with crt.sh indicates that Microsoft has actually created a lot of dedicated certificates, with some fairly unexpected domains in there (like jjduffyfuneralhome.com) along with some which it's probably not a good idea to allow employers to intercept (e.g. youngwomenshealth.org), especially given some current attitudes to women's healthcare in the US.

Whilst it's, undoubtedly, a better option than dynamic generation, issuing individual certificates in that way also doesn't really feel like it's very scalable.

I did toy with the idea of running a full test of which TLDs they have certificates for, but once you factor in gTLDs the list of things to test is quite long. Picking some at random though, I only found a few that aren't covered

- .bananarepublic (seriously, it's a proposed brand TLD)

- .eurovision

- .diamonds

- .democrat

- .republican

- .secure

Avoiding / Rectifying

DCA is primarily intended to serve members of an organisation who have enabled the service.

Arguably, then, there's no good reason that it should ever need to handle arbitrary domains from unauthenticated users: The redirect should be disabled and perhaps replaced with an appropriate error message instead.

There's also no good reason that those domains would ever legitimately be posted to social media, so MS could also talk to the big platforms to have them added to outlink blocklists. This wouldn't be a solution on its own though, because it fails to provide coverage for smaller UGC platforms.

Conclusion

It's not the most severe, or the sexiest of issues, but still feels worth flagging: Open Redirects of this nature can have a severe impact on the trust & safety mitigations that other platforms put in place to protect their users.

If I had access to an authenticated DCA session, there are a few things I'd like to test about the behaviour of the proxying solution: all proxied domains will (as far as the browser is concerned) go to subdomains of the same domain (example.com) which might have interesting effects on the cross-origin restrictions that browsers look to enforce.

It'd also be interesting to see how well the solution handles cookies set/accessed via Javascript. The solution appears to be built using OpenResty (a build of Nginx with Lua and other fun stuff baked in), so it's possible that rewrites rely entirely on Nginx's proxy_cookie_domain, leaving ample room for mischief.

Microsoft seem to have chosen a work-intensive route of handling SSL certificates for the service, and that's very much to their credit as a fully automated solution would require important (and widely trusted) key-matter to remain available (and therefore exposed).

I do hold some reservations about the way that Defender for Cloud Apps appears to operate: it seems a little short-sighted to teach users (and document!) that a domain can be considered OK if it ends in the relevant DCA domain name.

If Microsoft were ever to lose control of that domain, there'd be a lot of potential for problems, given that it provides a fantastic man-in-the-middle position for use against a wide range of solutions commonly used by enterprises.