Examining The Behaviour of a Self-Authenticating Mastodon Scraper

In a recent post I explored ways to impose additional access restrictions on some of Mastodon's public feeds.

When checking access logs to collect the IPs of scrapers identified by the new controls, I noted that one bot looked particularly interesting.

Although the new controls rejected other bots with the reason mastoapi-no-auth (which indicates that they hadn't included an authentication token in their request) the bot in question was instead rejected with the reason mastoapi-token-invalid.

This bot, unusually, was providing a token, just not a valid one.

This, as you might expect, elicited an almost irresistible sense of curiosity: what was being provided as a token, and why was it being presented?

In the process of looking into the bot's behaviour, I learnt a bit more about Mastodon's API and stumbled across an (apparently known) issue with Mastodon's security posture. This post shares both.

Background

In my original post, I noted some basic information about the bot:

The IP 129.105.31.75 is in a range associated with Northwestern University, if this is related to research the very least they could do is disclose it in the user-agent. It seems to visit once every 24 hours, so I may yet look into capturing more information about it.

At the time of writing this, my assumption was that the bot author had probably built their requests based on observation of something else and had accidentally left a token hardcoded into the request headers.

Because the bot visits relatively infrequently, I had to wait for its next visit to be able to take captures and see what it was including in the Authorization header.

IP Ownership and Usage

While I waited I did a little more background research on the IP itself.

The IP is part of AS103, operated by the US's Northwestern University.

The IP conveniently has an associated PTR record (sometimes known as reverse DNS or rDNS):

$ host 129.105.31.75

75.31.105.129.in-addr.arpa domain name pointer kibo.soc.northwestern.edu.

There's a bi-directional relationship, with the name resolving back to the same IP

$ host kibo.soc.northwestern.edu

kibo.soc.northwestern.edu has address 129.105.31.75

Note: Always check both directions. There's absolutely nothing to stop an IP's owner creating a PTR record pointing to www.whitehouse.gov, but that doesn't mean that the White House has anything to do with whatever mischief the IP owner is involved in (in fact, people sometimes even use PTR records to do fun things with traceroute).
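The two lookups above can be combined into a single forward-confirmed reverse DNS check. What follows is a minimal sketch rather than a hardened tool: the function names are illustrative, the parsing assumes `host` output in the format shown above, and only IPv4 addresses are handled.

```shell
#!/bin/bash
# Sketch: forward-confirmed reverse DNS (FCrDNS) check.
# Function names are illustrative; parsing assumes the `host` output format shown above.

# Extract the PTR target from `host <ip>` output, dropping the trailing dot
ptr_from_host_output() {
    sed -nE 's/.* domain name pointer (.*[^.])\.?$/\1/p'
}

# Extract IPv4 addresses from `host <name>` output
addrs_from_host_output() {
    sed -nE 's/.* has address ([0-9.]+)$/\1/p'
}

# Succeed only if one of the PTR names resolves back to the same IP
fcrdns_ok() {
    local ip="$1" name
    for name in $(host "$ip" | ptr_from_host_output); do
        if host "$name" | addrs_from_host_output | grep -qxF "$ip"; then
            return 0
        fi
    done
    return 1
}
```

It could be used as `fcrdns_ok 129.105.31.75 && echo "PTR confirmed"`. A PTR record that passes this check still only tells you who controls the name, not what the host is being used for.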

Accessing http://kibo.soc.northwestern.edu simply results in a default install page, but searching for the name returns a Community Data Science page detailing the system's usage.

So, we now know that this is a multi-tenant research server used by The Community Data Science Collective. Their homepage reveals that although this server is hosted at Northwestern, the group is made up of students and faculty from multiple universities.

Preparing Packet Captures

With some idea of who was looking, it's time to start investigating how they're looking (and what at).

My WAF exception graphs show that the bot generates mastoapi-token-invalid exceptions twice daily: once around 04:40 and then another between 07:00 and 09:00.

Checking access logs, though, reveals a much broader pattern of behaviour: the bot visits quite regularly through the early hours of the morning, but doesn't generate exceptions because it's hitting endpoints that aren't currently subject to the WAF's new restrictions.

There are two main options available to capture the bot's behaviour:

- Adjusting my log format to capture the Authorization header

- Run a packet capture somewhere upstream

Option 1 is easy to implement, but is also quite problematic: It's not just the bot's authentication headers which would be written into logs, valid authentication tokens from legitimate clients would also be captured, and would persist until the logs age out (or are manually removed, sacrificing logging for that period). It's an accident waiting to happen.

Packet captures can be removed without consequence and also have the benefit of showing the bot's request in its entirety (it's the Authorization header that I'm interested in, but who knows what else might be in there).

My Mastodon docker containers sit behind an nginx reverse proxy, so there's plaintext communication on the loopback interface between them - as near a perfect location for capturing as you're ever likely to get.

Initially, I had intended to run a rolling packet capture with tcpdump's -G option, but I ran into AppArmor-related issues and was running out of time, so implemented the rotation myself:

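#!/bin/bash

# Rotate the Mastodon capture: stop the running tcpdump, start a new one, compress finished files

cd /mnt/bigvol || exit 1

# List finished captures before the new file is created

captures=$(ls -1 *.pcap 2>/dev/null)

# Ask the running capture to flush and exit

killall -15 tcpdump

# date -u keeps the Z (UTC) suffix in the filename accurate

bash -c "nohup /usr/sbin/tcpdump -i any -s0 -w /mnt/bigvol/masto.$(date -u +'%Y-%m-%dT%H.%M.%SZ').pcap port 3000 &"

for capture in $captures

do

    gzip "$capture"

done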

This was added to cron and scheduled to run hourly

0 * * * * /mnt/bigvol/start_tcpdump.sh
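For comparison, the built-in rotation that was originally intended would look roughly like the sketch below. It is illustrative only: the function name is made up, and on a system with an AppArmor profile for tcpdump the output path or the post-rotate command may well be blocked, which is the kind of problem described above. `-G` rotates on a timer, the `-w` argument is expanded with strftime (local time, hence no Z suffix here) and `-z` runs a command against each completed file.

```shell
#!/bin/bash
# Sketch: let tcpdump rotate hourly itself instead of restarting it from cron.
# AppArmor's tcpdump profile may block the output path or the -z command.
start_rotating_capture() {
    /usr/sbin/tcpdump -i any -s0 \
        -G 3600 \
        -w '/mnt/bigvol/masto.%Y-%m-%dT%H.%M.%S.pcap' \
        -z gzip \
        port 3000
}
```

The advantage over the cron approach is that no packets are dropped in the gap between killing one tcpdump and starting the next.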

In order to ensure that its behaviour wasn't restricted, I also excluded the bot from my WAF protections (actually, that's a part truth: I went to bed, checked captures the next morning, realised I'd been a muppet, added the exclusion and then waited again).

Checking Logs

The next morning, I had loglines showing that the bot had visited overnight

129.105.31.75 - - [09/Jan/2023:02:38:11 +0000] "POST /oauth/token HTTP/1.1" 200 143 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.019 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:02:56:45 +0000] "GET /api/v1/instance/peers HTTP/1.1" 200 78305 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.222 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:02:56:45 +0000] "GET /api/v1/instance/peers HTTP/1.1" 200 78305 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.113 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:03:56:26 +0000] "GET /api/v1/instance HTTP/1.1" 200 1679 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.053 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:03:56:26 +0000] "GET /api/v1/instance HTTP/1.1" 200 1679 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.018 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:04:37:15 +0000] "GET /api/v1/timelines/public?limit=80&local=true HTTP/1.1" 200 23776 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.715 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:04:37:15 +0000] "GET /api/v1/timelines/public?limit=80&local=true HTTP/1.1" 200 23776 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.515 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:05:35:26 +0000] "GET /static/terms-of-service.html HTTP/1.1" 404 1173 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.019 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:05:35:27 +0000] "GET /static/terms-of-service.html HTTP/1.1" 404 1173 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.018 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:07:37:30 +0000] "GET /.well-known/nodeinfo HTTP/1.1" 200 129 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.018 mikasa - "-" "-" "-"

129.105.31.75 - - [09/Jan/2023:07:37:31 +0000] "GET /nodeinfo/2.0 HTTP/1.1" 200 210 "-" "Python/3.6 aiohttp/3.6.2" "-" "mastodon.bentasker.co.uk" CACHE_- 0.163 mikasa - "-" "-" "-"

The loglines themselves don't contain anything exciting, but the timestamps provide a useful reference for use when looking through the captures.
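When there are more than a handful of loglines, a quick tally per request makes the pattern easier to see. The following is a small sketch (the function name is illustrative) which assumes that the client IP is the first field and that the request is the first quoted string of the form `"METHOD path HTTP/..."`, as in the lines above.

```shell
#!/bin/bash
# Sketch: count requests per method and path for a single client IP.
# Reads access log lines on stdin; assumes the IP is the first field.
requests_by_path() {
    local ip="$1"
    awk -v ip="$ip" '
        $1 == ip {
            # Pull out METHOD and path from the quoted request
            if (match($0, /"[A-Z]+ [^ ]+ HTTP/)) {
                req = substr($0, RSTART + 1, RLENGTH - 6)
                count[req]++
            }
        }
        END { for (r in count) print count[r], r }
    ' | sort -k1,1nr -k2
}
```

For example, `requests_by_path 129.105.31.75 < access.log` (the log path is a placeholder) prints one line per distinct request, most frequent first.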

The Requests

As we can see in the logs above, the first thing that the bot does each day is to place a POST to /oauth/token. This is fairly curious because that endpoint is used to obtain an API token and requires details of a registered application.

This raises a few questions

- Is the bot somehow obtaining a token for use in later requests?

- If so, how?

- Have I accidentally signed up for something, and not realised I've granted this bot access in the process?

I decided to start by checking the rest of the requests, in case they helped shed some further light.

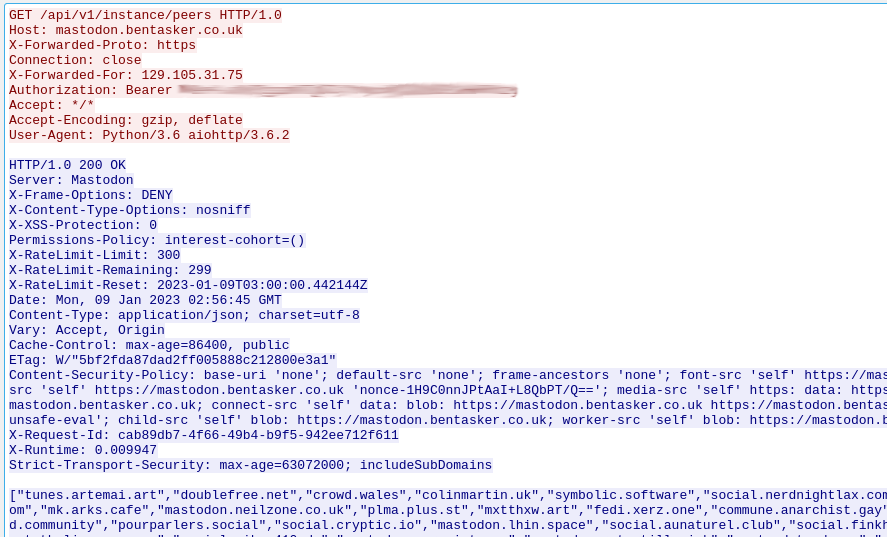

The next request, to /api/v1/instance/peers (an endpoint which lists every instance your instance has ever seen), includes an Authorization header:

(Ignore the HTTP/1.0, I made a minor boo-boo downstream: Nginx's default for upstream connections is 1.0 and I forgot to override it)

The capture shows that the bot acquired a token from /oauth/token and presented it alongside its later API requests, something which the Mastodon documentation hints might be wise to do.

The remainder of the requests were all much the same, presenting the same token each time.

The requests which tripped the WAF also use the acquired token

GET /api/v1/timelines/public?limit=80&local=true HTTP/1.0

Host: mastodon.bentasker.co.uk

X-Forwarded-Proto: https

Connection: close

X-Forwarded-For: 129.105.31.75

Authorization: Bearer <redacted_token>

Accept: */*

Accept-Encoding: gzip, deflate

User-Agent: Python/3.6 aiohttp/3.6.2
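Since captures contain live tokens, it's worth masking them as they're read out. A sketch of one way to do that is below: the function name is illustrative, and it assumes tcpdump's `-A` (ASCII) output, where the request line follows some header bytes on the same line and each HTTP header starts a new line.

```shell
#!/bin/bash
# Sketch: summarise HTTP requests from `tcpdump -A` output on stdin,
# printing request lines and Authorization headers with the credential masked.
summarise_requests() {
    grep -aoE '(GET|POST|PUT|PATCH|DELETE|HEAD) /[^ ]* HTTP/1\.[01]|^Authorization: .*' \
        | sed -E 's/^(Authorization: [A-Za-z]+ ).*/\1<redacted_token>/'
}

# Usage (the capture file name is a placeholder):
#   zcat masto.2023-01-09T02.00.00Z.pcap.gz | tcpdump -A -nn -r - 'port 3000' | summarise_requests
```

This keeps the auth scheme (Bearer, Basic and so on) visible, which is usually all that's needed to see how a client is authenticating.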

To summarise, we've got a bunch of requests accompanied by a valid token, itself apparently acquired via a request to /oauth/token.

Which means we need to work out why this bot is being issued with a token in the first place.

Acquiring a Token

The /oauth/token endpoint requires that four parameters be included in the request:

- grant_type: whether the resulting token will be used at user or application level

- client_id: An OAuth application client id

- client_secret: An OAuth application secret

- redirect_uri: a URL that the user should be redirected to. Can also be set to urn:ietf:wg:oauth:2.0:oob to have the endpoint simply return the token

A successful request to /oauth/token requires some privileged information - an application secret - in order to be provided with a token. And yet, the logs show that the bot is getting a positive response: they've either got a secret, or have found a way to exploit the endpoint.

Looking at the request in the packet capture, we can see that it's the former:

The bot is providing both client_id and client_secret, and Mastodon obviously considers them valid because it's returning a token.

This is a concern, but receiving a token doesn't automatically mean that it is useful. We've already seen that my WAF script's probes reject the token, so it might be that the token is valid but unable to escalate privileges above "public" (those achieved by a request without a token).

That's something to look into later though. The next thing to do is to figure out how it is that the bot is able to provide valid app credentials in the first place. Have I perhaps enabled an OAuth application that does something shady?

Finding the Linked Application

Mastodon, to my knowledge, doesn't provide a way to search registered applications by client id.

At this point, I didn't know what table the id was likely to appear in, so rather than spending time looking I took a lazy approach and ran a backup of PostgreSQL:

docker exec postgres pg_dumpall -U postgres | gzip > postgres_backup.sql.gz

And then grepped the dump for the client id I'd taken from the packet capture

zgrep "<client_id>" postgres_backup.sql.gz

This returned a line (I've added the table headers back in for ease of reading):

--

-- Data for Name: oauth_applications; Type: TABLE DATA; Schema: public; Owner: mastodon

--

COPY public.oauth_applications (id, name, uid, secret, redirect_uri, scopes, created_at, updated_at, superapp, website, owner_type, owner_id, confidential) FROM stdin;

5 CDSC Reader <redacted_id> <redacted_secret> urn:ietf:wg:oauth:2.0:oob read 2022-11-21 03:43:52.591579 2022-11-21 03:43:52.591579 f <redacted_url> \N \N t

Note: I've redacted the application's URL in the snippet above because it points to a page providing information useful for contacting the bot's author and I don't want an angry horde to descend onto them. I did contact the author, more on that in a bit.
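Once the table is known, a direct query is quicker than grepping a full dump. The sketch below assumes (as the row above suggests) that the uid column holds the client id. The function names are illustrative, and the character check is an assumption about what client ids look like rather than anything Mastodon documents.

```shell
#!/bin/bash
# Sketch: look up an OAuth application by client id without taking a full dump.

# Build the query, refusing anything that doesn't look like a client id
# (assumption: ids only contain letters, digits, "-" and "_")
lookup_app_sql() {
    local client_id="$1"
    local pattern='^[A-Za-z0-9_-]+$'
    if [[ ! "$client_id" =~ $pattern ]]; then
        echo "client id contains unexpected characters" >&2
        return 1
    fi
    printf "SELECT id, name, scopes, website, created_at FROM public.oauth_applications WHERE uid = '%s';\n" "$client_id"
}

# Run the query in the same container used for the backup
lookup_app() {
    local sql
    sql=$(lookup_app_sql "$1") || return 1
    docker exec postgres psql -U postgres -d mastodon -c "$sql"
}
```

The secret column is deliberately left out of the SELECT so that it doesn't end up in terminal scrollback.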

Searching the backup revealed that there's an application with that client_id in Mastodon's database. How did that get there??

It's possible that I screwed up at some point and unknowingly authorised it, but something didn't quite smell right.

The order that the apps are listed in the table indicates that this isn't something nasty like a default app that's been quietly included in Mastodon (it appears after applications that I know I enabled post-install):

Web

Mastodon for Android

Tusky

Tusky

CDSC Reader <----- this one

WDC Mastodon

The other apps are all ones which I've added by linking mobile clients on my phone etc.

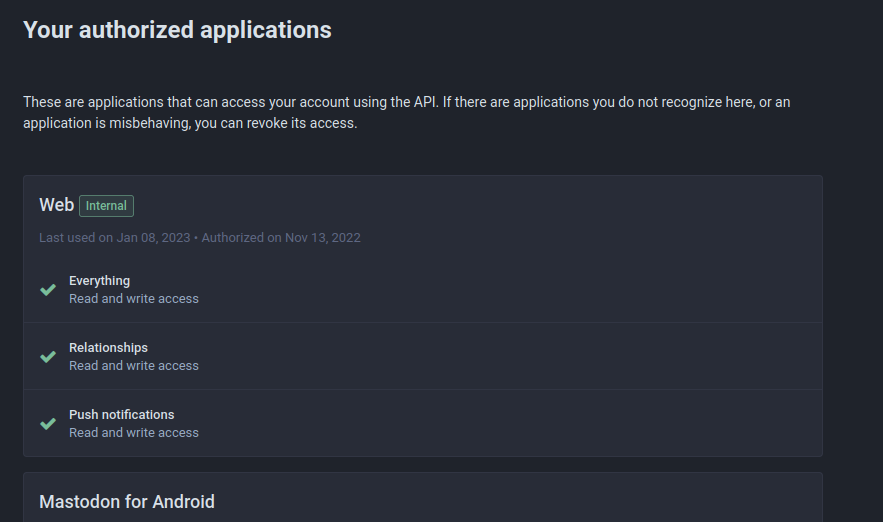

Mastodon's UI includes a configuration view that users can use to manage and view their linked applications (Preferences -> Account -> Authorized Apps)

But... the CDSC Reader app isn't listed there (I logged into my other accounts on that instance to check those too).

The metadata doesn't quite add up either: The entry in the database contains two timestamps (created and updated), both showing 2022-11-21T03:43:52Z. It's true that I do sometimes have issues sleeping, but I generally stay off social media overnight, so it seems quite unlikely that I'd be authorising anything at 4am.

Luckily, this is something that can be checked: The 21st of November is less than 90 days ago, within the retention period of some of my (admittedly less detailed) logs.

There are no log lines at this time suggesting that I was active, but there is a visit by the bot:

129.105.31.75 [21/Nov/2022:03:43:52 +0000] "POST /api/v1/apps HTTP/1.1" 200 331 "Python/3.6 aiohttp/3.6.2" "mastodon.bentasker.co.uk" 0.025
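The same kind of search can be run across older logs to find any client that has registered an app. A minimal sketch follows, with an illustrative function name; it assumes the client IP is the first field and that the timestamp is the first bracketed field, which holds for both log formats shown in this post.

```shell
#!/bin/bash
# Sketch: list app registrations (POSTs to /api/v1/apps) found in access logs.
# Reads log lines on stdin and prints the client IP and timestamp of each.
app_registrations() {
    awk '/"POST \/api\/v1\/apps[ ?]/ {
        # The timestamp is the first [bracketed] field on the line
        if (match($0, /\[[^]]+\]/)) print $1, substr($0, RSTART, RLENGTH)
    }'
}
```

Something like `zcat -f access.log* | app_registrations` (the log path is a placeholder) would then give a list of timestamps to compare against created_at values in the database.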

The endpoint /api/v1/apps is used to Create an application and (surprisingly) doesn't require authentication.

The bot simply turned up and... created its own app.

With this knowledge, we can now easily replicate the bot's behaviour with curl, creating an app and then using its credentials to obtain an API token

# Create the app

curl -v \

-X POST \

-d "client_name=benstestApp" \

-d "redirect_uris=http://somewhere" \

-d "scopes=read" \

-d "website=https://www.bentasker.co.uk" \

https://mastodon.bentasker.co.uk/api/v1/apps

# Take secret and id from output of the above command

curl -v \

-X POST \

--data-urlencode "grant_type=client_credentials" \

--data-urlencode "client_id=$CLIENT_ID" \

--data-urlencode "client_secret=$CLIENT_SECRET" \

--data-urlencode "redirect_uri=urn:ietf:wg:oauth:2.0:oob" \

"https://mastodon.bentasker.co.uk/oauth/token"

The response body of the second request provides an access token

{"access_token":"<redacted_token>","token_type":"Bearer","scope":"read","created_at":1673257281}

We can then use the verify_credentials endpoint to confirm that the token's valid

curl -v \

-H "Authorization: Bearer <redacted_token>" \

"https://mastodon.bentasker.co.uk/api/v1/apps/verify_credentials"

It is.
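The three requests can be chained so that the id, secret and token don't need to be copied by hand. This is a sketch under a few assumptions: the function names are illustrative, the instance URL is passed in by the caller, and the sed-based extraction relies on the compact JSON seen in the response above (a real JSON parser such as jq would be more robust).

```shell
#!/bin/bash
# Sketch: register an app, obtain an app-level token and verify it.

# Print the value of a string field from compact JSON on stdin
json_string_field() {
    local field="$1"
    sed -nE "s/.*\"${field}\":\"([^\"]*)\".*/\1/p"
}

# $1 is the instance base URL, e.g. https://mastodon.example (placeholder)
register_and_verify() {
    local instance="$1" app client_id client_secret token
    app=$(curl -s -X POST \
        -d "client_name=benstestApp" \
        -d "redirect_uris=urn:ietf:wg:oauth:2.0:oob" \
        -d "scopes=read" \
        "$instance/api/v1/apps")
    client_id=$(echo "$app" | json_string_field client_id)
    client_secret=$(echo "$app" | json_string_field client_secret)
    token=$(curl -s -X POST \
        --data-urlencode "grant_type=client_credentials" \
        --data-urlencode "client_id=$client_id" \
        --data-urlencode "client_secret=$client_secret" \
        --data-urlencode "redirect_uri=urn:ietf:wg:oauth:2.0:oob" \
        "$instance/oauth/token" | json_string_field access_token)
    curl -s -H "Authorization: Bearer $token" "$instance/api/v1/apps/verify_credentials"
}
```

Note that every run leaves another record in oauth_applications, so it's best pointed only at an instance you administer.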

Is The Token Useful?

As I mentioned earlier, provision of a token doesn't equate to provision of a useful token.

The token is valid, but does it actually allow us to gain access to anything that we couldn't access without it?

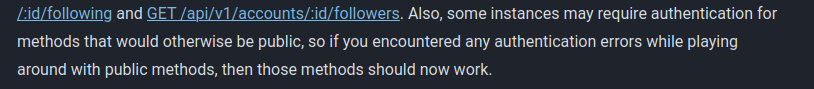

As we saw earlier, the docs do hint that this may be a possibility

With our authenticated client application, we can view relations of an account with GET /api/v1/accounts/:id/following and GET /api/v1/accounts/:id/followers. Also, some instances may require authentication for methods that would otherwise be public, so if you encountered any authentication errors while playing around with public methods, then those methods should now work.

The paths mentioned in that snippet (/api/v1/accounts/:id/followers and /api/v1/accounts/:id/following) cannot be used with the application token alone; it needs to have been linked to a user account.

I thought that the token might perhaps be useful for endpoints that are otherwise protected by unticking Allow unauthenticated access to public timelines (i.e. those I was concerned with in my previous post), but these also deny the request.

In fact, based on a quickly hacked together probe, there don't seem to be any meaningful endpoints (i.e. those which provide access to activity) which behave differently with the generated token than without one.

Certainly, none of the endpoints that this bot is seen hitting in logs seem to behave any differently.

At most, the error returned by some endpoints switches from complaining about a lack of token to complaining that the token isn't user level

{"error":"This method requires an authenticated user"}

My Ruby's not great, but a quick review of base_controller.rb and associated files also didn't reveal any obvious paths to making this token useful.
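The probe itself isn't reproduced here, but something along the following lines would do a similar job. It's a sketch only: the function names and the endpoint list are illustrative (the paths are ones seen elsewhere in this post), and it compares nothing but HTTP status codes, so it would miss an endpoint that returns 200 either way but with a different body.

```shell
#!/bin/bash
# Sketch: compare responses with and without an app-level token.
ENDPOINTS=(
    "/api/v1/instance"
    "/api/v1/instance/peers"
    "/api/v1/timelines/public?limit=80&local=true"
)

# Print the HTTP status for a URL, adding the bearer token if one is given
status_for() {
    local url="$1" token="$2"
    local args=(-s -o /dev/null -w '%{http_code}')
    if [ -n "$token" ]; then
        args+=(-H "Authorization: Bearer $token")
    fi
    curl "${args[@]}" "$url"
}

# Describe whether the token changed the outcome for an endpoint
describe_change() {
    local endpoint="$1" anon="$2" authed="$3"
    if [ "$anon" = "$authed" ]; then
        echo "same $anon $endpoint"
    else
        echo "DIFF $anon->$authed $endpoint"
    fi
}

# $1 is the instance base URL, $2 the token to test
probe_all() {
    local instance="$1" token="$2" endpoint
    for endpoint in "${ENDPOINTS[@]}"; do
        describe_change "$endpoint" \
            "$(status_for "$instance$endpoint")" \
            "$(status_for "$instance$endpoint" "$token")"
    done
}
```

Any line starting with DIFF marks an endpoint worth a closer look by hand.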

Contacting The Author

If the token genuinely doesn't elevate access, a fairly large question is raised: "why create the application entry in the first place?"

The only answer that I could come up with was that perhaps something had changed between Mastodon 3.x and 4.x and that it used to convey some form of benefit.

The bot's author provides contact information when registering the app, so I decided to contact them and ask.

As it turns out I was overthinking it (who, moi?) and the application isn't created for technical reasons at all.

The author was trying to do the right thing: they wanted their requests to be linked back to an app in order to make it clear where the requests are coming from (providing some transparency and accountability).

They've said that they're going to update the bot's user-agent to better surface this information.

Mastodon

As it turns out, the fact that arbitrary apps can be added to Mastodon's database is already known, but seems not to be seen as being too big of a deal.

There are some reasonable arguments made in that issue for why it's not too problematic, but I tend to lean towards this still being something which requires a fix (albeit, not urgently).

The GitHub issue highlights one issue with this form of open registration

So, any "bad" guy can register infinite number of application on my server, or I need to close this URL path by Nginx.

The idea that someone can arbitrarily fill your storage space is fairly problematic, but there are mitigations available to help prevent this (rate limits etc), as well as much more convenient and effective options for those looking to DoS an instance.

However, I think the issue fails to acknowledge some larger issues:

- There's a lack of visibility for admins: there's nothing in the Mastodon UI which can be used to even see that this has been happening

- As we've seen, having a valid client_id and client_secret allows an API token to be obtained

- Those tokens allow unauthorised traffic to masquerade as authorised

Lack of Visibility

The lack of visibility is quite concerning and is part of a wider issue with Mastodon not always giving administrators sufficient insight into what's going on with their instance.

Admins are broadly responsible for looking after the experience of their userbase, which (up to a point) includes looking out for their privacy.

The fact that an instance admin can't use the UI to see which apps are registered in the database conflicts with this.

If it were suddenly announced that an app called AcmeEvil had been observed doing $evil, admins wouldn't be able to see whether it had registered on their instance unless they were comfortable querying PostgreSQL directly.

It feels quite contradictory to expect instance admins to act responsibly on their users' behalf, but not provide them with insight or control over what actually uses their instance.

An example of how this might be misused is Facebook's Cambridge Analytica scandal. CA's product was a linked application which claimed to provide some utility, but also used the linked user's privileges to collect/spy on other users' behaviour.

Currently, an unscrupulous app could do the same with Mastodon and instance administrators wouldn't even be able to see that it's registered.

App Registration Allows A Token To Be Obtained

Although the issued tokens currently appear to be of limited use, their being available at all leaves a door open for future problems.

For example, a number of API endpoints implement security checks in the following way

before_action -> { authorize_if_got_token! :read, :'read:statuses' }

When I first saw this, I was concerned that the name implied that it only checked for the presence of a valid token. Which, thankfully (and sanely), it doesn't.

It's not beyond the bounds of possibility, though, that during a future refactor, a developer might take the name as read and change the check so that it only checks presence. With one simple (and easily understandable) mistake, app-issued tokens would become much more powerful.

Obviously, that's a fairly extreme example, but it's far from the only mistake which could be made and a simple mistake defining a scope could also be sufficient to cause issues.

Such an eventuality is made worse by the fact that there are entirely unknown and untrusted apps involved.

The intended workflow always involves some level of trust (even if misplaced), because it requires that a user/admin tell an app to link to their account on instance xyz.

That's not the case with self-registering bots: the code (and its author) are entirely unknown to admins and users alike (who, in fact, probably don't even know of the app's presence), so there's absolutely no way to know whether any of the application credentials are being appropriately stored. Conceptually, they could even be being written to pastebin posts.

Allows Illegitimate Traffic To Masquerade

The third concern intersects a little with the second, because the ability to acquire (superficially) valid tokens allows the bot to add a veneer of respectability to its requests.

Admins looking to improve the security of their instance might choose to put a Web Application Firewall (WAF) in front of their instance.

As I demonstrated in a previous post, in order to add additional protections, they might choose to have the WAF make an upstream request to verify provided tokens are valid.

Logically, it would make sense to use /api/v1/apps/verify_credentials in order to verify that a token is valid, but doing so would lead to the WAF believing the request was legitimate and allowing it through. It really is nothing but blind luck that I used the Suggestions endpoint instead, otherwise I wouldn't have noticed this bot in the way that I did.

It's not just WAF rules either, other techniques used as part of a defence-in-depth approach are also affected: anyone using log analysis might look at whether a logged request included authentication credentials, potentially allowing this bot's activity to pass the smell test.
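To make the distinction concrete, the sketch below shows the decision a WAF could take if its upstream check hits an endpoint that only accepts user-level tokens. It is not the ruleset from the earlier post: the function names and the "allow" result are illustrative, and using /api/v1/accounts/verify_credentials for the check is an assumption (a genuine user token lacking a suitable read scope would also be turned away by it).

```shell
#!/bin/bash
# Sketch: classify a request from its Authorization header and the HTTP status
# returned by an upstream check against a user-only endpoint.
waf_decision() {
    local auth_header="$1" user_check_status="$2"
    case "$auth_header" in
        "Bearer "?*) ;;                               # a token was supplied
        *) echo "mastoapi-no-auth"; return ;;
    esac
    if [ "$user_check_status" = "200" ]; then
        echo "allow"
    else
        echo "mastoapi-token-invalid"
    fi
}

# Ask Mastodon whether the token belongs to a user; app-only tokens are refused.
# $1 is the instance base URL, $2 the token taken from the request
user_check_status() {
    local instance="$1" token="$2"
    curl -s -o /dev/null -w '%{http_code}' \
        -H "Authorization: Bearer $token" \
        "$instance/api/v1/accounts/verify_credentials"
}
```

With this shape of check, a self-registered app's token lands in the mastoapi-token-invalid bucket rather than being waved through as it would be with /api/v1/apps/verify_credentials.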

Defences

Unfortunately, the options available for preventing arbitrary creation of apps really are quite limited.

For tiny instances, the /api/v1/apps endpoint can be blocked at the webserver level

location /api/v1/apps {

return 403;

}

But this really isn't particularly convenient: if you later want to connect a new app, you'll first need to re-edit this config and unblock the path.

Blocking the path at all, though, just won't be a viable option for most larger instance admins: users expect to be able to connect apps, which is reliant on this path being available and unauthenticated.

Without some kind of fix in Mastodon itself (it's hard to envisage what such a fix might look like, at least without breaking existing apps), the only viable option is implementing detection rather than prevention.

My WAF ruleset is an easy example of this: without its reports, I likely wouldn't have even looked at most of the things in this post.

Another simpler route is to write a script to periodically send a report of newly registered apps:

#!/bin/bash

#

# Query Mastodon's database and look for newly created apps

#

POSTGRES="docker exec postgres psql -U postgres -d mastodon"

# Who are we emailing?

DEST="me@example.invalid"

# Run the query

RESP=`$POSTGRES -c "SELECT id, name, redirect_uri, scopes, created_at, updated_at, superapp, website FROM public.oauth_applications WHERE created_at > now() - interval '24 hour' "`

# Any results?

if [ "`echo "${RESP}" | tail -n1`" == "(0 rows)" ]

then

# No results

exit

fi

# Send a mail

cat << EOM | mail -s "New Mastodon apps registered on $HOSTNAME" $DEST

Greetings

Newly registered apps have been seen in the Mastodon database on $HOSTNAME

$RESP

You can extract additional information on each of these by taking the ID and running

SELECT * FROM public.oauth_applications WHERE id=<id>

In Postgres

EOM

It can then be scheduled to run daily in cron with something like

0 1 * * * /path/to/cronscript.sh

However, I monitor Mastodon with Telegraf so rather than creating a cron-script I added some config to call Telegraf's postgresql_extensible input plugin

[[inputs.postgresql_extensible]]

interval = "15m"

address = "host=db user=postgres sslmode=disable dbname=mastodon"

outputaddress = "postgres-db01"

name_override="mastodon_oauth_applications"

[[inputs.postgresql_extensible.query]]

sqlquery="SELECT id, name, redirect_uri, scopes, created_at, updated_at, superapp, website FROM public.oauth_applications WHERE created_at > now() - interval '15 minute'"

withdbname = false

tagvalue = "name,scopes,superapp,website"

(There's a copy of this available here)

This runs every 15 minutes and collects details of applications created in that timeframe. The results are sent on to InfluxDB allowing a TICKscript like the one used for my webmentions notification to collect and email the information.

Both approaches provide some visibility, but are still very far from perfect:

On busy instances, they'll likely be quite noisy because each user install of a mobile app such as Tusky generates its own app record in Postgres. It might be prudent to filter common app names, although this does carry the risk of those names then being used to help hide malicious registrations.

Additionally, like anything capable of sending an automated mail, it could be misused to throw abuse into an instance admin's mailbox (through crafted app names/URLs etc).
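One partial mitigation for that last point is to neutralise the query output before it goes anywhere near a mail client. The sketch below uses an illustrative function name and is deliberately blunt: it strips every byte that isn't printable ASCII (so legitimate non-ASCII app names lose characters too) and truncates long lines, with the 200 character limit being an arbitrary choice.

```shell
#!/bin/bash
# Sketch: clean report text before it is placed in an email.
# Strips non-printable bytes (terminal escape sequences etc.) and truncates long lines.
sanitise_report() {
    LC_ALL=C tr -cd '[:print:]\n' | cut -c1-200
}
```

In the cron script above, this would mean mailing the output of `echo "$RESP" | sanitise_report` rather than `$RESP` directly. It does nothing about abusive but printable text, so the report is still best treated as untrusted input.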

Conclusion

As soon as I saw that the bot seemed to be providing a token, I knew it was going to be interesting to look at.

The bot's author, for their part, took steps to try and increase accountability for the bot's behaviour, providing an audit trail to link requests back. Unfortunately, this actually resulted in a request pattern which - at first glance - is potentially quite alarming.

Mastodon allows arbitrary applications to register themselves, with no admin or user input/visibility.

As well as creating junk records in the database, registered applications can use their credentials to obtain an authentication token for use with Mastodon's API, leaving the instance's security posture entirely reliant on how well Mastodon actually observes and enforces API scopes.

Those tokens can also be used to add an air of authenticity to a request in order to try and bypass third-party defences such as WAFs, access log analysis and request scoring systems.

Mastodon's entire third-party application model is built around this workflow, so as much as I hate to bring problems without solutions, it's quite hard to envisage a fix that's able to remain backwards compatible.

Blocking this particular bot is easy; unfortunately, there doesn't seem to be a clear route to prevent future bots from using the same mechanism.

There are mitigations which can be put in place, the most flexible of which are aimed at detection rather than prevention, but all come with drawbacks (including some potential for abuse).