Looking at the Instagram Account Review Process

Less than a week ago, I set up a system to automatically announce new blog posts on Threads. However, it is no longer active.

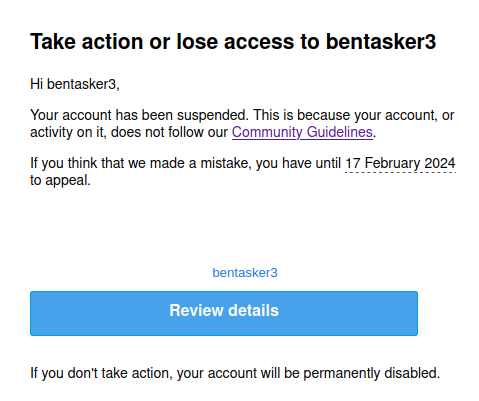

Despite my account having only posted about 4 times (with only one of those being an automatic post by the script above), it got suspended because they felt it wasn't in line with their "Account integrity and authentic identity" rule.

In particular:

We don't allow people on Instagram to create fake accounts.

Initially, I assumed that this was caused by my publishing bot periodically logging in, but a search on the net suggested that others have had similar experiences. Update: they've since issued a cease-and-desist to the library I was using.

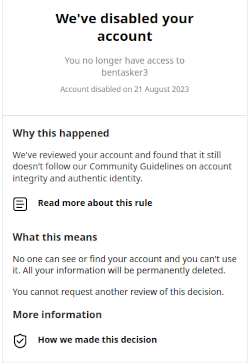

When an account is suspended, the user is given the option to appeal. However, my appeal was rejected and the account is now set to be permanently deleted.

I'm not overly concerned about the loss of my Threads account: in the brief time that I had access, the platform didn't really engage me (and it seems that the same is true for others too), not least because I struggled to find accounts that I actually wanted to follow amongst the noise (in the fediverse, I tend to follow certain hashtags - something that Threads doesn't support at all).

Having now been through the Threads appeal process, I wanted to write down some of my observations about the detection and appeal process that Instagram/Threads follow, because (IMO) it's overly invasive and potentially quite deeply flawed.

Your Profile Is One Mistake Away from Deletion

Instagram and Threads are tied to one another - you need an Instagram account to access Threads, so I created an Instagram account specifically because I wanted to be able to use Threads. As a result, my Instagram profile was completely empty and I've lost very little.

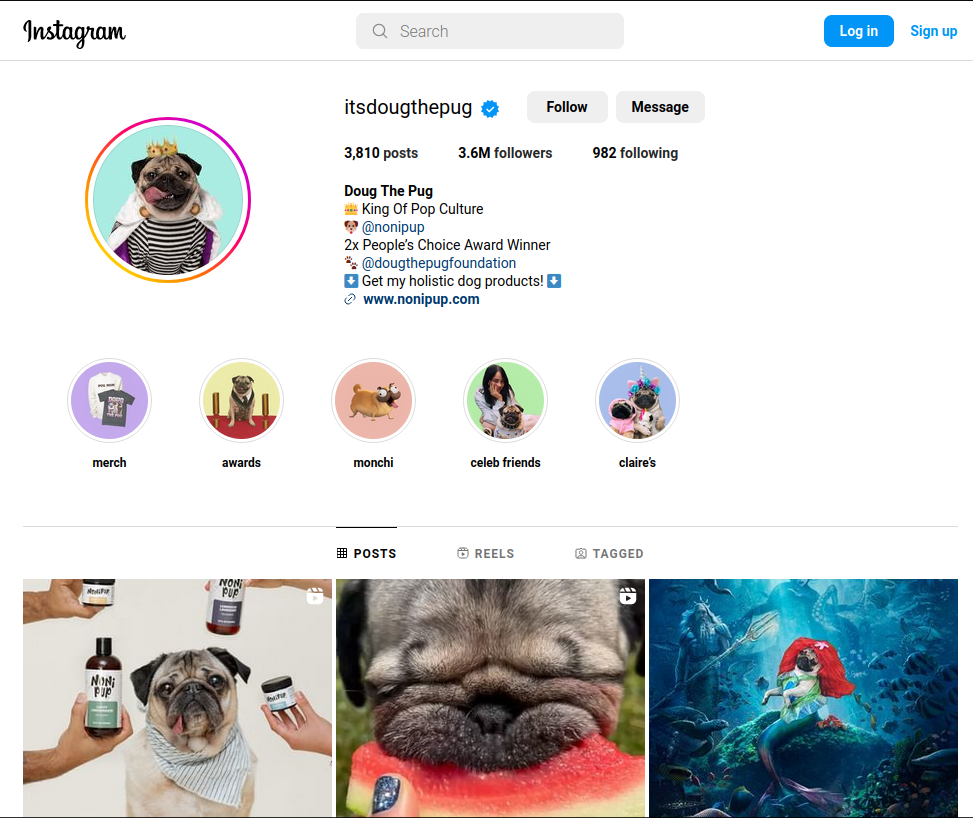

Normal Instagram users, though, put quite a lot of effort into building their profile and curating various photos over periods of years.

Although Instagram's not really my thing, a lot of profiles clearly have had a lot of effort, time and skill invested into them.

However, all that work is one mistake (and not necessarily the user's) away from being wiped completely.

As soon as an account is suspended, the content that it holds becomes inaccessible, even to the affected user. The profile and its contents stop being publicly available and logging in just redirects the user to a page telling them that they've been suspended, with the option to start a review of the decision: there's no way for them to download and preserve the fruits of their work.

If the request for a review fails, then that's it: there is no path for requesting a second review and all of the user's content will ultimately be deleted (at least, so far as Meta can be trusted to actually delete anything).

The Review Process

Logging in after a suspension prompts the user to start the review process.

In true Meta style, the process is a personal data hoover, requiring the user to:

- Complete a CAPTCHA

- Provide and verify an email address

- Provide and verify a phone number

- Take and provide a selfie holding a piece of paper

The user isn't told, before starting, what the process consists of, and each step looks like it's the final one, only to then be followed by another request (if that methodology sounds familiar, it's because it's a technique that scammers use - really not a great look).

When a phone number is requested, the screen notes that Instagram won't use it for other purposes but that, if it's been provided on other Meta properties, it will be used for advertising etc. That's probably meant to say that provision doesn't change any pre-existing behaviour, but it's wrapped up in the sort of opaque wording that Facebook are known for.

The selfie has some requirements attached to it:

- You're given a number to write on a piece of paper

- Your face, the paper and your hand must be visible in the selfie

There's no review of supplied information prior to submission: you simply walk through the steps and are then told that you'll normally receive a decision within 24 hours (mine took about 8).

If the review is rejected, it's the end of the account: there is no further route of appeal.

Automated Decisions

In order to lose your account, two things need to happen:

- An initial suspected violation

- A user-launched review needs to fail

In theory, the review acts as a safeguard. In practice, though, it looks like Meta might have decided to automate away the toil.

I've written in the past about the problems that occur when human oversight is removed, and it looks like this may just be yet another example of that.

One of the things that occurred to me, especially when looking at others' reports of their accounts getting suspended, is that Meta's automated processes might not account for the symbiotic relationship between Instagram and Threads.

Before Threads launched, it could be considered fairly unusual to have an Instagram account that was active but had an empty profile. With the advent of Threads, though, suddenly there are accounts (like mine) where the Instagram account exists only because it's necessary to gain access to Threads.

If (as seems likely) the automated processes involve machine learning, it may well be that they currently consider an empty profile to be quite a strong signal for abuse.

Selfie Judged Unacceptable

Just after the notification that my review had failed, I received a second email explaining that it was because the selfie wasn't acceptable.

I'm not (quite) stupid enough to publish a picture here of myself holding a piece of paper, but looking at the image in my gallery it looks like it probably failed requirements because my hand slipped out of shot.

That seems like an easy mistake to make and there are a raft of other similar mistakes that could be made (not writing the number clearly enough, covering too much of your face with the paper, forgetting to reverse the camera's mirroring of the image, etc.).

Any sane review process should allow re-submission of the selfie: for example, when you apply for a passport, the Passport Office do not say "sorry, photo not acceptable, passport refused", they ask for a new photo that does meet requirements.

Meta's review process, therefore, is fragile: a mistake by the user (or the verifier) means the end of an account because there's no second appeal to detect/rectify mistakes made in the first appeal.

Invasive Verification

It would be remiss of me to write all this and not note this: I cannot say fuck you enough to the fact that Meta require a selfie at all.

This is only made more aggravating by the fact that the review step doesn't provide details of:

- The lawful basis under which they're requesting it

- What they'll do with it (is it being fed into AI, will it be retained, etc.)

Compelling provision of a phone number is bad enough, but requiring provision of something that can be used to derive biometric information as part of a flawed appeals process really is taking it a step further.

Conclusion

Of course, in the context of a company that has been implicated in genocide, the potential retention of a single selfie is little more than a statistical anomaly. Losing access to Threads also really isn't the end of the world (in fact, as user counts plummet, it's hard not to be reminded of the phrase "And Nothing Of Value Was Lost").

But, I am irritated by the collection of personal data as part of an appeals process that I'm not convinced ever had a chance of success. No information has been provided about what happens to that data following the review and it's hard not to assume that a data-hoarding company is going to, well, hoard.

Of course, even without Meta's reputation, it's not an overly surprising outcome, given that they had to delay the launch of Threads in the EU because of concerns about (forthcoming) strong user protections (not that the DMA doesn't have its own flaws).

Still, I'm left with a few unanswered questions so, although I don't expect that I'll receive a particularly substantive reply, I've written to Meta's data controller to ask:

- Was the selfie treated as biometric information (i.e. were facial recognition techniques applied to it)?

- Now that the appeal has failed, when will the data be deleted?

- Can you delete it sooner and certify that it has been removed?

- On what basis was the appeal rejected (I got a message suggesting the selfie wasn't acceptable, but it didn't say why)?

- Was this decision made by a human?

- Why does Meta feel it's acceptable to collect this level of information, but allow (I assume) a single mistake in the selfie to end all avenues of account restoration?

Those who actually care about their Instagram accounts should probably be a little concerned that their accounts are a single faulty review away from being permanently disabled, with no way to subsequently retrieve content.

That's probably all the more likely for those who also use Threads: on Twitter, it wasn't unusual for certain groups to mass-report people that they disagreed with, and it's reasonable to believe the same may start to happen on Threads (at least, if it actually retains a userbase), leading to an increased likelihood of suspension.

In fact, I'd be inclined to say that, if you're an Instagram user, it'd probably be wise to create a second, Threads-specific account to mitigate this risk.