Another blocklist allegedly misused - this time by OnlyFans

Although they're currently only allegations, a claim has arisen that OnlyFans misused a terrorist-content blocklist to blacklist performers who were using rival services.

Whilst I appreciate that most of those campaigning for mandatory age-verification are unlikely to feel much sympathy for adult performers, it's important to look beyond the profession of those affected and understand the underlying issues.

The list (ab)used was implemented with the very best of intentions: preventing the dissemination of terrorist recruitment material and propaganda via social media networks.

There's overlap with the Online Safety Bill here, because lists like this are one of the mechanisms which would need to be used to control the flow of content in order to try and comply with the duty of care that the bill imposes.

This isn't the first time that a well-intentioned censorship mechanism has been abused, and (unless human nature changes) it certainly won't be the last.

Rather than simply listing examples, this post is intended to look at the ways by which censorship mechanisms ultimately end up diverging from their stated purposes. For convenience, I'll use the term "misuse" as a substitute for "used in ways not originally intended" - it's not intended to imply judgement over the eventual use.

It's also not my intention to deny that there are wider issues which need to be addressed. It's all too clear that there are serious problems and that many providers aren't doing enough, but we still need to ensure that measures are proportional and effective. Understanding the failure modes of censorship is absolutely essential to that.

Backdoor deals

This article started by talking about the OnlyFans allegations, so let's continue with those.

The claim is that OnlyFans looked for adult performers who were using the services of competitors (such as FanCentro). They then had those performers' accounts and content added to the terrorist content blacklist that's used and shared by the large social media providers.

The workings of this blocklist, run by the Global Internet Forum to Counter Terrorism (GIFCT), aren't widely disclosed. However, it's more than a simple URL blacklist: it uses media fingerprinting techniques to help detect and block different variations of the blocked content.
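To show why fingerprinting reaches further than a URL list, here's a minimal sketch using average hashing (aHash), a much simpler cousin of production perceptual hashes such as Meta's open-source PDQ. It is not GIFCT's algorithm, which isn't publicly documented in detail. The 8x8 greyscale grid, the `THRESHOLD` value and both images are hypothetical. A cryptographic digest changes completely after any edit, whereas the perceptual hash of a lightly edited copy still lands within the match threshold.

```python
import hashlib
from typing import List

# Hypothetical image representation: an 8x8 grid of greyscale values (0-255).
Image = List[List[int]]


def average_hash(img: Image) -> int:
    """64-bit aHash: one bit per pixel, set when the pixel is brighter than the mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


def exact_digest(img: Image) -> str:
    """Cryptographic digest: any change at all yields an unrelated value."""
    return hashlib.sha256(bytes(p for row in img for p in row)).hexdigest()


# A hypothetical "listed" image (a simple pattern) and a copy brightened slightly.
original = [[(x * 32 + y * 8) % 256 for x in range(8)] for y in range(8)]
edited = [[min(p + 3, 255) for p in row] for row in original]

THRESHOLD = 10  # hypothetical: maximum differing bits still treated as a match

print(exact_digest(original) == exact_digest(edited))  # False: exact matching misses it
print(hamming(average_hash(original), average_hash(edited)))  # 0: well within THRESHOLD
```

The threshold is the crux: loosening it catches more doctored copies, but it also raises the chance that unrelated content matches, which is one way a fingerprinting list can overblock.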

What this meant is that the performers' social media posts (and sometimes accounts) all but disappeared from view, depriving those performers of revenue and reducing traffic to OnlyFans' competitors (whilst presumably increasing traffic to OnlyFans itself).

It's important to note that OnlyFans couldn't directly add content to the list, as they don't have access to it. The allegation instead is that they bribed someone sufficiently senior at one of the large social media providers to add it for them.

So, if the allegations are true, we've got two sets of abuse here:

- OnlyFans were paying bribes to have adult performers added to a list intended to prevent the dissemination of terrorist propaganda

- whoever they were bribing was abusing their access to that list for personal gain

Both are a clear misuse of an extremely well-intentioned system.

Legal Scope Creep

OnlyFans' alleged actions are underhanded (and likely illegal), but that's not the only way that blocklists end up being misused.

The Internet Watch Foundation maintain a list with an equally laudable aim: preventing the discovery and dissemination of Child Sexual Abuse Material (CSAM).

Sometimes their approach has been questionable and downstream implementations problematic, but it really is hard to criticise their stated aim.

Around the turn of the century, BT developed and added technology (known as Cleanfeed) to their network in order to block access to known CSAM.

It wasn't an easy decision: even BT's then-CEO publicly noted the difficulty in finding a balance between fighting CSAM and maintaining freedom of information:

You are always caught between the desire to tackle child pornography and freedom of information. But I was fed up with not acting on this and always being told that it was technically impossible.

At the time, it was unprecedented and was reported as being "the first mass censorship of the web attempted in a Western democracy".

Cleanfeed consumed the IWF's list and attempted to block access to anything found therein. Although there were some concerns about the implications, the solution was generally accepted as a necessary and proportional response to the increase in online paedo-boards. Over time, other ISPs followed suit.
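Public technical analyses of Cleanfeed describe a two-stage hybrid design: traffic to IP addresses that host any listed URL is diverted to a proxy, which then checks the exact URL. The toy model below assumes that design; the list entries, hostnames and IP addresses are all hypothetical, and it is not BT's implementation.

```python
from urllib.parse import urlsplit

# Hypothetical blocklist: full URLs to be blocked.
BLOCKED_URLS = {"http://bad.example/abuse/page1"}

# Hypothetical DNS view: hostname -> IP address (documentation ranges).
DNS = {
    "bad.example": "192.0.2.10",
    "innocent.example": "192.0.2.10",  # shares an IP with a listed site
    "other.example": "198.51.100.7",
}

# Stage 1 input: every IP address that hosts at least one listed URL.
SUSPECT_IPS = {DNS[urlsplit(u).hostname] for u in BLOCKED_URLS}


def route(url: str) -> str:
    """Decide what a toy two-stage blocker does with a request."""
    ip = DNS[urlsplit(url).hostname]
    if ip not in SUSPECT_IPS:
        return "direct"   # stage 1: most traffic never touches the proxy
    if url in BLOCKED_URLS:
        return "blocked"  # stage 2: the proxy matches the exact URL
    return "proxied"      # same IP, not listed: passed through via the proxy


for u in ["http://other.example/", "http://innocent.example/", "http://bad.example/abuse/page1"]:
    print(u, route(u))
```

Only traffic to suspect addresses is proxied, which keeps the cost down. Note also that nothing in `route` cares what the list is *for*: hand it a different set of URLs and it blocks those instead.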

However, in 2011 things changed.

Whilst Cleanfeed had been implemented to block CSAM, it began to be used to block sites that facilitated copyright infringement.

This came about because the Motion Picture Association (MPA) successfully argued that as BT had censorship technology in their network, they could be ordered to use it to block other illegal content (in this case, Newzbin2). It was a landmark case, and it ushered in an era of national censorship that still exists over a decade later.

The technology was implemented (and gained public consent) in order to address the worst of the worst, but through legal process started to be used for an entirely different purpose. In this example, it was via the courts and existing law, but there is no reason it could not also occur as a result of Government action.

Ineptitude

Sometimes censorship apparatus ends up being misused, not because of deliberate action, but because the operators or the systems themselves are inept.

The simplest example of this is the Scunthorpe problem. Poorly operated filters identify the word cunt within the town name Scunthorpe, and block it. Other names, like Essex, Clitheroe and Penistone, are similarly affected.
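The Scunthorpe problem comes from matching banned strings anywhere in the text. The sketch below, using a hypothetical word list, compares a naive substring check with a whole-word regular expression.

```python
import re

# Hypothetical list of banned strings, for illustration only.
BANNED = ["cunt", "sex", "penis", "clit"]


def naive_filter(text: str) -> bool:
    """Block if any banned string appears anywhere (case-insensitive substring match)."""
    lowered = text.lower()
    return any(word in lowered for word in BANNED)


def word_boundary_filter(text: str) -> bool:
    """Block only when a banned string appears as a whole word."""
    return any(
        re.search(rf"\b{re.escape(word)}\b", text, re.IGNORECASE) for word in BANNED
    )


# Each line shows: place name, naive result, whole-word result.
for place in ["Scunthorpe", "Essex", "Penistone", "Clitheroe"]:
    print(place, naive_filter(place), word_boundary_filter(place))
```

Word boundaries remove these particular false positives, but they're no cure: a whole-word match still can't tell a plate of faggots from a slur, and determined users simply misspell their way round it.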

Wikipedia notes quite a number of examples, including:

- Jeff Gold tried to register the domain shitakemushrooms.com but was blocked by InterNIC's profanity filter

- Craig Cockburn had issues with his work email, because his surname contains cock and his job title, Software Specialist, contains the string cialis

- The educational site RomansInSussex.co.uk was unreachable from many educational institutions because sex appears in the domain name

- Whakatane's municipal Wi-Fi blocked the town's own name, because their "advanced" filter decided that Whak sounds sufficiently similar to fuck

- China blocked searches for the name Jiang to squash rumours that Jiang Zemin had died. Jiang also means river, so searches for rivers started returning the block message

- Google attempted to address an issue with Google Shopping listing firearms; as a result, searches for Glue Guns, Guns N' Roses and Burgundy Wine were inadvertently blocked

- News site profanity filters changed people's names (Tyson Gay became Tyson Homosexual), as well as changing words like classic to clbuttic

- The Pokémon Cofagrigus could not be traded because its name contains the substring fag

- Facebook blocked UK users who used the term faggot whilst talking about their dinner

- Political debate about Dominic Cummings was stymied on Twitter because the cum in his name triggered filters

- Facebook muted and banned users for misogyny and bullying, because they were talking about Plymouth Hoe
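Rewriting filters fail in much the same way as blocking ones. This sketch uses a hypothetical two-entry substitution table and replaces matches anywhere inside a word (keeping a leading capital), which is enough to reproduce both the clbuttic and the Tyson Gay errors.

```python
import re

# Hypothetical substitution table of the kind a naive "family-friendly" filter might use.
REPLACEMENTS = {"ass": "butt", "gay": "homosexual"}


def _match_case(replacement: str, matched: str) -> str:
    """Capitalise the replacement if the matched text started with a capital."""
    return replacement.capitalize() if matched[:1].isupper() else replacement


def naive_rewrite(text: str) -> str:
    """Substitute every occurrence of each key, ignoring case and word boundaries."""
    for bad, good in REPLACEMENTS.items():
        text = re.sub(
            re.escape(bad),
            lambda m, good=good: _match_case(good, m.group(0)),
            text,
            flags=re.IGNORECASE,
        )
    return text


print(naive_rewrite("Tyson Gay won the classic"))  # Tyson Homosexual won the clbuttic
```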

Some of these might seem amusing (because they are), but there's a common theme: lots of things, in all aspects of our online lives, end up being erroneously censored by systems that someone put in place to do something good.

It's also worth noting that the large social media platforms still feature in this list: despite having money and skills to throw at the problem, they are far from immune to making this kind of mistake.

Accidental overblocking doesn't just apply to words either.

In 2013 it was discovered that more than 1000 websites had been accidentally blocked in Australia. This happened because, following an order from the Australian Securities and Investments Commission (ASIC), the IP address that those sites shared was blocked. ASIC had been attempting to target a single fraudulent website and hadn't properly considered the implications. ASIC later revealed that in the previous 12 months they'd inadvertently censored 1000 other sites and 249,000 domains, despite only actually having used their powers 10 times.
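The ASIC overblock follows from how shared hosting works: many unrelated domains can resolve to a single IP address, so an order expressed as an IP block takes all of them down at once. The hosting data below is hypothetical and uses documentation addresses.

```python
# Hypothetical hosting data: several unrelated domains behind one shared-hosting IP.
RESOLVES_TO = {
    "fraud-target.example": "203.0.113.5",
    "community-college.example": "203.0.113.5",
    "local-bakery.example": "203.0.113.5",
    "elsewhere.example": "198.51.100.20",
}


def collateral(blocked_ips: set) -> list:
    """Return every domain made unreachable by blocking the given IP addresses."""
    return sorted(d for d, ip in RESOLVES_TO.items() if ip in blocked_ips)


# The order is aimed at one site, but is enforced as a block on its IP address.
print(collateral({RESOLVES_TO["fraud-target.example"]}))
# ['community-college.example', 'fraud-target.example', 'local-bakery.example']
```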

For some time, my site was inaccessible in Russia for a similar reason. I'd not been specifically targeted; some of the nodes I served it from briefly shared a provider with Telegram, and when the Russian comms regulator, Roskomnadzor, ordered ISPs to block a wide array of netblocks, some of those blocks rendered my site inaccessible from Russia.
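Netblock blocking widens the blast radius further, because a single CIDR range can cover hundreds or thousands of addresses belonging to unrelated customers. A minimal sketch with Python's standard `ipaddress` module, using hypothetical documentation prefixes in place of real provider ranges:

```python
import ipaddress

# Hypothetical blocked ranges; documentation prefixes stand in for real provider netblocks.
BLOCKED_NETS = [ipaddress.ip_network(n) for n in ("198.51.100.0/24", "203.0.113.0/25")]


def is_blocked(addr: str) -> bool:
    """True if the address falls inside any blocked netblock."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in BLOCKED_NETS)


print(is_blocked("198.51.100.42"))  # True: inside the blocked /24
print(is_blocked("203.0.113.200"))  # False: outside the /25 (which ends at .127)
print(sum(net.num_addresses for net in BLOCKED_NETS))  # 384 addresses caught in total
```

For comparison, blocking a single /16 would sweep up 65,536 addresses, whoever happens to be using them.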

General WTFerry

Sometimes there isn't a specific category to put things into, because there's no good explanation for them, so you're left with a catch-all.

The story of Australia's attempt at a national firewall (The Great Australian Firewall) falls quite firmly into this category.

Even before the attempts to implement censorship "for the greater good", Australia's government had a less than stellar relationship with online censorship. For example, in 2006 they had forcibly removed a spoof website carrying a transcript of the Prime Minister apologising for the Iraq War.

Despite this, though, the Australian Communications and Media Authority (ACMA) was empowered to create a blocklist of sites carrying content ranging from CSAM to media that would've been refused a classification in Australia. Presented by Stephen Conroy (at the time the minister for Communications), the filter, it was claimed, would protect Australians from the most objectionable content on the web.

In 2009, though, the ACMA blocklist was leaked to WikiLeaks and was found to contain a surprising array of sites, including:

- Online poker sites

- Fetish sites

- Satanic and Christian sites

- Pornography sites of various orientations

- Philip Nitschke's work discussing euthanasia

- The website of a Queensland dentist

More than half of the sites blocked failed to fall within a strict definition of the categories that the ACMA blocklist had originally been sold as targeting. Further questioning by the media revealed that ACMA had, to some extent, taken the view that it had been given carte blanche over what went onto the list.

No explanation was ever publicly given for how the unfortunate dentist found their way onto the list.

The Unintended Consequences of Censorship

We've explored some of the ways in which censorship apparatus ends up being misused and seen some of the impact, but censorship also tends to lead to other unintended consequences.

The most common, ironically, is the unwanted spread of information relating to the censorship mechanism itself.

The perversion of Cleanfeed into an anti-copyright-infringement tool had a secondary impact, one also seen following ACMA's blocking of anything discussing euthanasia.

Both created a (much) more socially acceptable reason for seeking to learn how to circumvent the censorship.

In the UK of 2006, if you were asking how to bypass Cleanfeed, it could only be because you wanted to be able to access child sexual abuse material. As a result, knowledge on how to circumvent it was quite well contained: to publicly seek that knowledge would be to publicly out yourself as a potential predator, and publicly disclosing that information (however you came by it) would only assist those predators.

2011 changed things: the ruling meant that there was suddenly a much wider userbase interested in bypassing Cleanfeed. CSAM seekers could hide amongst them, seeking assistance under the pretext of trying to regain access to Newzbin2 (and other blocked sites).

After ACMA blocked access to discussions of euthanasia, groups wanting to foster discussion/debate of the topic held seminars around Australia teaching users how to circumvent censorship through use of proxies and VPNs.

Whilst copyright infringement isn't OK, and euthanasia is a controversial topic, neither attracts the same level of stigma and hate as seeking out CSAM. Fear of that same stigma helped to contain the knowledge and prolong the effectiveness of Cleanfeed, but once it was diluted, there was no putting the genie back into the bottle.

As a direct result of the 2011 ruling, knowledge on how to bypass Cleanfeed-like systems is now extremely widespread, to the extent that people tend to know the techniques without necessarily even knowing of Cleanfeed's existence. This development isn't a surprise, or something that could only be known with hindsight; it was so predictable a consequence that I wrote about it at the time.

The future of the terrorist blacklist

That OnlyFans were allegedly able to manipulate the GIFCT list is disappointing but (given the history of internet censorship) not particularly surprising.

It was inevitable that, at some point, the list was going to be misused. The only real surprise was that it appears to have happened via the backdoor first.

The history of Cleanfeed teaches us that if a technology is in place, it can be co-opted (and extended) by the Government or the justice system.

Sometimes, that happens because those in power simply don't understand the systems they're referring to, something aptly demonstrated a few years back by the then Home Secretary, Amber Rudd.

The GIFCT list is far more advanced than the URL blocklists of old: it's designed to cope with an adversary who tries to circumvent it by slightly modifying images or videos. Whilst technologically impressive, broad matching like this also tends to make mistakes much, much more far-reaching, even before staff start accepting bribes to add inappropriate material to the list.

Whether the OnlyFans allegations are true or not, it's likely that similar overblocks will happen again in future (and may already have done so). The question, as with all secret lists, is whether we'll ever actually hear about it.

The future of UK Internet Censorship

Like so many of the projects we've mentioned in this post, the UK's Online Safety Bill has laudable aims: it wants to ensure that children and vulnerable adults are kept safe online.

One of the measures being driven as part of that is mandatory age verification on any service that might contain age-inappropriate content: porn sites, social media etc. Providers will be given a "duty of care" and made responsible for ensuring that the content they're serving UK users is age-appropriate, which is a way of saying that age verification will be everywhere, without directly mandating that it must be.

If this sounds familiar, it's because similar age-verification checks were previously planned, but were dropped in 2019 when they came into contact with reality (not least the realisation that kids see and share porn on social media, something I wrote about back in 2011).

Unfortunately, the Government's inept handling of the whole matter led to the creation of an industry (the Age Verification Providers) who were then deprived of customers (because their captive user-base was never provided to them). So, this time round, there's a more significant lobbying force behind some of the measures.

There are numerous issues with the entire age-verification concept, but the reason I've mentioned age verification at all is because the proposed measures inherently rely on the implementation and threat of censorship.

Without that threat, the proposed measures are toothless against any non-UK website: any website not wanting to absorb the ongoing cost/hassle/data protection risks of age-verification could simply ignore the requirement.

The intended way to address this is to empower Ofcom to order the blocking of domains that refuse to comply - theoretically keeping UK users safe, because the service will either have verified their age or won't be reachable at all.
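The post doesn't say how ISPs would implement Ofcom-ordered blocks; DNS-level blocking is one common approach and is assumed in this sketch, where a hypothetical listed domain is matched along with all of its subdomains.

```python
# Hypothetical list of domains an ISP has been ordered to block.
BLOCKED_DOMAINS = {"noncompliant.example"}


def blocked(hostname: str) -> bool:
    """True if the hostname, or any parent domain of it, is on the list."""
    labels = hostname.lower().rstrip(".").split(".")
    # Check "a.b.c", then "b.c", then "c".
    return any(".".join(labels[i:]) in BLOCKED_DOMAINS for i in range(len(labels)))


print(blocked("www.noncompliant.example"))  # True: subdomain of a listed domain
print(blocked("noncompliant.example.net"))  # False: different domain entirely
```

A check like this only applies to users who ask their ISP's resolver in the first place; anyone using another resolver, a VPN or Tor never reaches it.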

History shows, though, that we end up less well off where censorship is present.

Even the Government's own research found that there were likely to be serious unintended consequences, noting that

There is also the risk that both adults and children may be pushed towards ToR where they could be exposed to illegal activities and more extreme material

I wrote earlier about how censorship scope creep often leads to the dissemination of knowledge on circumvention, but this is something different.

The Government's own assessment found that the designed implementation could have that effect and increase the very harm it was intended to prevent.

Before even a single domain is blocked by Ofcom, implementation of the "protective" measures could lead children and vulnerable adults to circumvent all network-level protections. They won't just be circumventing the age checks, they'll be bypassing Cleanfeed and its brethren. Although the impact assessment specifically mentions Tor, this is true of whatever circumvention method is used, whether that's VPNs, proxies or even the sneakernet.

The Age Verification requirements of the Digital Economy Act were shelved at the very last minute because it became clear that they simply weren't workable. Despite that (and the embarrassing rejection of the earlier mandatory web filters), a more extreme version of the same ideas has been resurrected with very little attempt to address the earlier flaws.

This iterative approach suggests that, should some of the measures in the Online Safety Bill come into being, we should expect to see ever-tightening restrictions as the governments of the day come under pressure to try and fix the many, many circumvention vectors that exist, eventually culminating in suggestions that Tor and VPNs should be blocked.

The Most Impacted are the Least Targeted

In almost any censorship system, there's an uncomfortable truth: those who are most heavily targeted also tend to be the least affected, particularly in the long term.

By 2010, Cleanfeed essentially existed only to prevent accidental discovery of CSAM. Those who actively sought out CSAM were already unimpeded by Cleanfeed, having long since found ways around it (Freenet, proxies, VPNs etc.). As a result, the only users really affected by Cleanfeed were those who weren't looking for CSAM but were trying to access something that had been overblocked (like Wikipedia or the Internet Archive).

This fundamental flaw doesn't just apply to censorship either: in fact, its best example is found in the efforts of the copyright lobby.

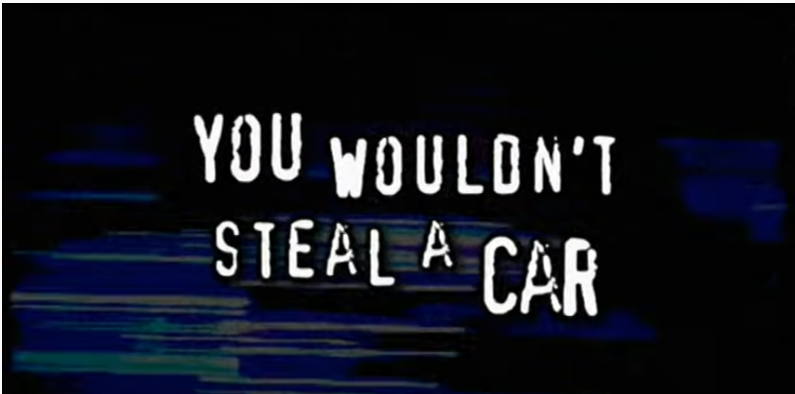

Most people are probably familiar with the unskippable anti-piracy adverts on DVDs and Blu-rays (the most famous example being the "You wouldn't steal a car" ad so beautifully lampooned by The IT Crowd).

The one type of media consumer that doesn't see those ads? Pirates.

Those adverts get stripped from pirated copies (along with all the other ads), so the only people who have to sit through them are those who are actually already paying for content. The technical control that prevents you skipping them only affects those who are doing the right thing.

Those targeted by the ads never actually see them, whilst everyone else (the least targeted) has to sit through them every time they want to play a DVD.

Conclusion

In this post, I've focused explicitly on the technical side of censorship - how it's implemented and what goes wrong as a result.

There are many other issues around the Online Safety Bill, including that its poor wording around "duty of care" may lead to censorship of LGBT people, that its wording will cause chilling effects as a result of platforms being overly cautious, and the data-protection headaches inherent in an effective age verification process.

Each of those topics is worthy of an article in its own right, so I'll not try and do them justice here.

Many of those who support the Age Verification aspects of the Online Safety Bill are, I suspect, unlikely to feel very much sympathy for the adult performers (and fans) impacted by OnlyFans' alleged behaviour. But, regardless of who was impacted, it's a symptom of a much bigger problem: mandated online censorship is inevitably used in a way not intended by those who first supported it.

The behaviour alleged of OnlyFans would be an example of outright abuse and corruption, but there are many examples of systems being perverted through other means, whether that's legal process or plain ineptitude. Whilst there may be a temptation to say "it couldn't happen here", almost every example given in this post occurred within a democratic state: it did happen here, or somewhere like here.

Censorship via legal process might sound benign (desirable, even), but it's not always so.

Whilst we might all have enjoyed watching Telegram frustrate Roskomnadzor, Roskomnadzor's attempts at blocking came about as the result of legal process. It was Russia's courts, not its Government, that ordered the block, and the result was a huge amount of collateral blocking (with Telegram itself remaining fairly unaffected).

Despite the fairly broad categories of screw-up that can be applied to online censorship, you're still left with a need for "everything else" to account for oddities like Australia's exploration of building a national firewall.

The idea that it's possible to build an effective censorship mechanism that won't fall prey to abuse, scope creep or incompetence is, at best, extremely naive.

The impacts of censorship are always more widespread than initially intended, and the harms further-reaching. At best, it creates a cycle of self-defeat, where overreaching censorship leads to widespread dissemination of information on how to thwart it (something that happens even in authoritarian countries). At worst, it becomes a tool of oppression for tomorrow's government, censoring far more than was ever intended.

Many of the measures described by the Online Safety Bill simply cannot function without the threat of censorship - it's the stick that the Government must wield in order to try and force compliance by sites based overseas.

It's inevitable, though, that those blocking powers will be misused. Today, they're sold as protecting the vulnerable, but tomorrow they will be used to block something else. If we're extremely lucky, it might just be a small dentist's office in one city...