My Own Little HeartBleed Headache

When the HeartBleed bug was unveiled, I checked all of my servers to see whether they were running vulnerable versions. They weren't, but once the patched versions were released it seemed a good juncture to test and roll out the update to one server.

What followed was something of a headache, initially with all the markings of a serious compromise.

Having now identified and resolved the root cause, I thought I'd write a post about it so that others seeing similar behaviour can get something of a headstart.

In response to threats such as CDorked, I run PHP Changed Binaries on all my servers, so any file in PATH is checked (daily) for changes, based on a cryptographic checksum. If any changes are detected, an alert is raised so that I can investigate the cause of the change.

The day after I updated OpenSSL, I started receiving alerts for a wide variety of files (I'd updated hashes following the update of OpenSSL)

Changed Files

So the first thing to look at was which files had changed, and what it could potentially indicate.

2014-04-11 00:30:22: Changed Binaries output follows

0 Security alerts

45 Alarms

0 Warnings

1350 Information messages

ALARMS

/usr/bin/snmpdelta has changed

/usr/bin/snmpnetstat has changed

/usr/bin/php-cgi has changed

/usr/bin/ftpdctl has changed

/usr/bin/ncat has changed

/usr/bin/encode_keychange has changed

/usr/bin/reporter-kerneloops has changed

/usr/bin/snmpbulkwalk has changed

/usr/bin/mysql has changed

/usr/bin/mysqlslap has changed

/usr/bin/snmpget has changed

/usr/bin/mysqlimport has changed

/usr/bin/reporter-rhtsupport has changed

/usr/bin/fipshmac has changed

/usr/bin/mysqlcheck has changed

/usr/bin/snmptable has changed

/usr/bin/php has changed

/usr/bin/nsupdate has changed

/usr/bin/dig has changed

/usr/bin/fipscheck has changed

/usr/bin/snmpusm has changed

/usr/bin/nmap has changed

/usr/bin/curl has changed

/usr/bin/snmpgetnext has changed

/usr/bin/snmpset has changed

/usr/bin/mysqlbinlog has changed

/usr/bin/snmptest has changed

/usr/bin/snmpvacm has changed

/usr/bin/mysqladmin has changed

/usr/bin/snmpdf has changed

/usr/bin/host has changed

/usr/bin/nslookup has changed

/usr/bin/snmpbulkget has changed

/usr/bin/snmptrap has changed

/usr/bin/nping has changed

/usr/bin/wget has changed

/usr/bin/reporter-upload has changed

/usr/bin/snmptranslate has changed

/usr/bin/snmpinform has changed

/usr/bin/mysql_upgrade has changed

/usr/bin/mysqltest has changed

/usr/bin/snmpstatus has changed

/usr/bin/snmpwalk has changed

/usr/bin/mysqlshow has changed

/usr/bin/mysqldump has changed

Report ends

The very first thing that should be obvious, is that to change these files, you need to be root.

The second thing that stands out is that a number of the items that have changed are tools you might use when trying to identify whether you've been compromised, or use to monitor the server.

Oh shit.....

Basic Checks

The first thing I did was to hop onto the server, run a fresh install of RKHunter and Clamav, and run a check. Nothing......

Checking the modification times of the files listed yielded nothing, they were all months or more ago.

So, as a test case, I re-installed curl

yum reinstall curl

But at the next check interval, it started alarming. Even worse, my install of ClamAV had started alarming too....

0 Security alerts

5 Alarms

0 Warnings

1927 Information messages

ALARMS

/usr/bin/curl has changed

/usr/bin/clambc has changed

/usr/bin/sigtool has changed

/usr/bin/clamscan has changed

/usr/bin/freshclam has changed

So now one of my security tools has been tampered with. Not good.....

In the meantime, I'd been pouring over logs looking for any sign of a compromise, finding nothing to indicate a successful intrusion. Yet important files were still changing with no input from me.

Taking Stock

I stopped at this point to consider the possible causes, and decided that either;

- I was missing something important, or

- A bug in my monitoring (perhaps unveiled by the OpenSSL update?), or

- The system had been compromised by an advanced attacker.

The latter seemed to be a real possibility;

- There were unexplained changes being made to import items on the system,

- mtimes were unchanged (so had perhaps been tampered with)

- there was no sign of a compromise in the logs (so they'd perhaps been tampered with)

- security tools I'd installed were being tampered with

I could still be missing something, but as this is security, it's best to proceed on the basis of the worst case scenario and hope to get a pleasant surprise!

Assessing the Changes

Although finding out how the changes occurred was important, assessing the impact of the changes was equally important. What changes were actually being made? Were they malicious? (and would they give me a clue as to what was going on?).

So, I set-up a small honeytrap, re-installing a number of the affected items so that I could examine the changes being made. With copies of the unmodified binaries stored safely off-server I waited for the next alarm, which occurred that night.

Time to see what changes had been made (again, using curl as an example)

# md5sum /usr/bin/curl

9a164ef0323ee15b7df712a509ca3eb1 /usr/bin/curl

# md5sum /root/.retain/curl.unmodified

19a3dc257627a4fa3c9ff4c98e5607a7 /root/.retain/curl.unmodified

# ls -s /usr/bin/curl

132 /usr/bin/curl

# ls -s /root/.retain/curl.unmodified

120 /root/.retain/curl.unmodified

The files definitely differed (ruling out the small possibility of this whole thing being a bug in ChangedBinaries).

So I dumped both to assembler to see what's changed

# objdump -d /root/.retain/curl.unmodified > unmodified

# objdump -d /usr/bin/curl > after

# diff -u unmodified after

— unmodified

+++ after

@@ -1,5 +1,5 @@

-/root/.retain/unmodified: file format elf64-x86-64

+/usr/bin/curl: file format elf64-x86-64

Disassembly of section .init:

Whilst the files themselves have changed (as evidenced by the checksums), what they do (and how they function) is unchanged (as evidenced by the empty diff).

Re-assuring, but decidedly odd....

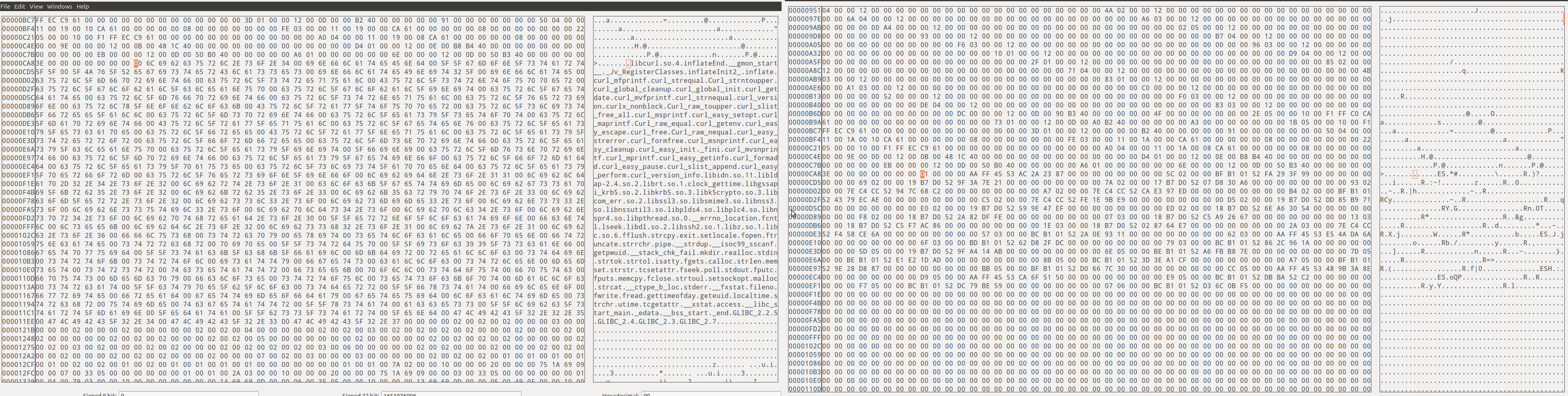

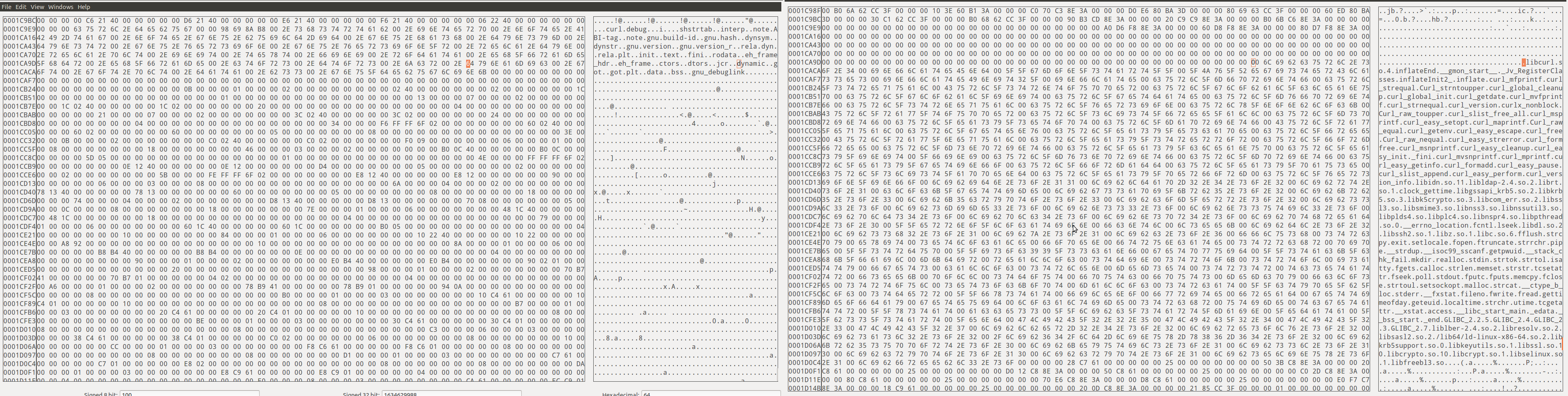

What had actually changed then? I dumped to Hex to try and find out

# hexdump /usr/bin/curl > after.hex

# hexdump /root/.retain/curl.unmodified > unmodified.hex

# diff -u after.hex unmodified.hex

A large number of changes were output (far too many lines to paste into this post!), so although only 12 bytes had been added to the file, other changes had been made (though curiously none affecting the output of dis-assembling)

I needed to find out what was actually being changed, so I fired up hexdiff to look at the difference (difference shown here in GHex - it's just easier to read in screenshots). The changes that had been made were at the beginning and end of the files.

In each screenshot, the modified version is on the right (click to enlarge);

Strange..... and every alerting item I checked showed the same - no change in functionality, just modification to the positioning of libraries.

Considering Possible Explanations

Although strange, the changes being made didn't appear to be malicious, so it was unlikely to be the result of an attacker trying to cover their tracks (or worse).

Although I considered it unlikely, it was also possible that my webhost was doing something on the back-end storage (some form of de-duplication perhaps?).

Although I couldn't quite rationalise it, I figured it was best to just ask to be sure, so I raised a support ticket detailing what I'd found so far and asking if they were doing anything behind the scenes.

Increasing Monitoring

Whilst waiting for my host to respond, I set about making it easier to narrow down the cause.

The first part of the puzzle that was still missing was exactly when changes were occurring, so I changed the monitoring window down to 10 minutes, re-installed a number of the items and waited.

As expected, alarms triggered, so now I could narrow it down to a 10 minute window between 0310 and 0320.

In the meantime, my host had replied to say they hadn't changed anything, and didn't have any ideas either.

Checking Logs again

Now that I had a window of change, I was able to narrow my search, so I went back over the system logs to try and find out what was running. I found the answer in my cron log

03:13:57 868162 run-parts(/etc/cron.daily)[7979]: starting prelink 03:14:14 868162 run-parts(/etc/cron.daily)[8285]: finished prelink

Suddenly it all became clear, but even the obvious needs to be tested. So I ran a check, reinstalled mysql, ran prelink and then triggered a second changed binaries check

# php changed_binaries.php | grep "Alerts"

0 Alerts

# yum reinstall mysql

# /etc/cron.daily/prelink

# php changed_binaries.php | grep -i "Alert"

ALERT: /usr/bin/mysql has changed

ALERT: /usr/bin/mysqlslap has changed

ALERT: /usr/bin/mysqlimport has changed

ALERT: /usr/bin/my_print_defaults has changed

ALERT: /usr/bin/mysqlcheck has changed

ALERT: /usr/bin/mysqlbinlog has changed

ALERT: /usr/bin/mysql_config has changed

ALERT: /usr/bin/mysqladmin has changed

ALERT: /usr/bin/mysql_waitpid has changed

ALERT: /usr/bin/mysqlshow has changed

ALERT: /usr/bin/mysqldump has changed

11 Alerts

A repeat of the test yielded the same results, finally I'd found the cause

Understanding the Cause

Prelink is designed to reduce the performance penalty of dynamically linking libraries, by using the system's dynamic linker to link libraries in advance (i.e. prelinking - it does what it says on the tin!). If a library changes then any programs using that library will need to look that library up again - until it's next prelinked. Prelink rather deliberately preserves the modification time (mtime) of the files it's updating, so that's why I could see no change there.

OpenSSL is a core library and used by a large number of programs. When I updated OpenSSL, I changed the libraries so each of these programs needed prelinking afresh, which resulted in the programs being changed on disk - which in turn triggered the alerts.

Conclusion

Far from being a serious compromise, the alerts were triggered by normal system behaviour, it just took a bit of investigation to put 1 & 1 together and get 2!

Having found the cause, the commonality of OpenSSL between the alerting items feels like it should have stood out more (especially as I'd recently updated OpenSSL), but whilst I had considered the alerts might be as a result of updating OpenSSL, I failed to rationalise how that could be the case (aside from a brief 'Shit, have I accidentally update to a vulnerable version?').

That said, I'd still rather err on the side of caution and rule out the potential of a compromise than to assume causality. Doing anything else would render the alerts pointless, even if it does sometimes take a bit of time to find the root cause.

Had I narrowed the check window earlier, I may have found the cause sooner. I did initially consider it, but was concerned that if an attacker was on the system, such a change might help them become aware of the monitoring, which would, at best, deny me an investigation tool. Once I'd identified that the changes weren't (or didn't appear to be) malicious, I was more willing to take this risk.

I had checked crontab's for all users early on, but forgot about cron.daily otherwise I'd have been reminded of prelink sooner.