A comparative analysis of search terms used on bentasker.co.uk and its Onion

My site has had search pretty much since its very inception. However, it is used relatively rarely - most visitors arrive at my site via a search engine, view whatever article they clicked on, perhaps follow related internal links, but otherwise don't feel the need to search manually (past analysis showed that use of the search function dropped dramatically when article tags were introduced).

But, search does get used. I originally thought it'd be interesting to look at whether searches were being placed for things I could (but don't currently) provide.

Analysing search terms is interesting and beneficial because the terms represent things that users are actively looking for. Page views might be accidental (users clicked your result in Google but the result wasn't what they needed), but search terms indicate exactly what they're trying to get you to provide.

As an aside to that though, I thought it'd be far more interesting to look at which categories search terms fall under, and how the distribution across those categories varies depending on whether the search was placed against the Tor onion or the clearnet site.

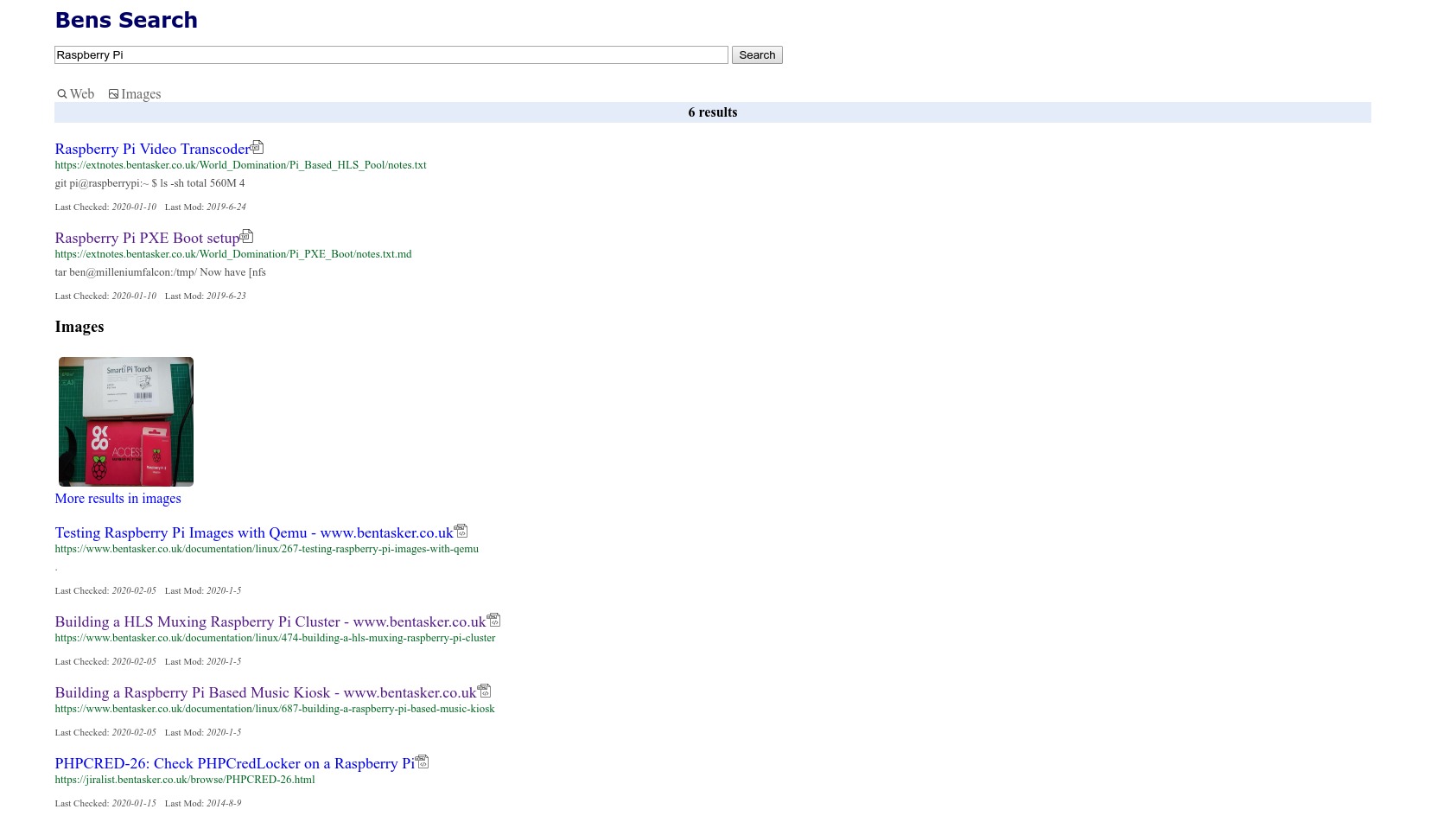

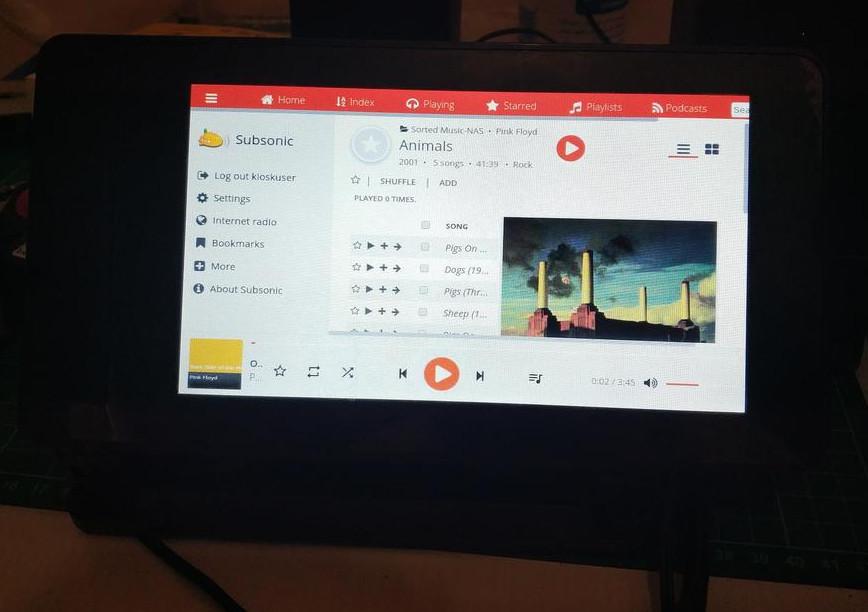

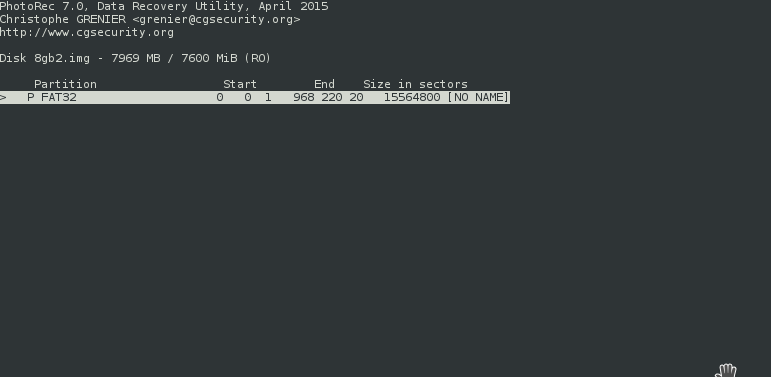

This post details some of those findings, some of which were fairly unexpected (all images are clicky).

If you've unexpectedly found this in my site's search results, then congratulations, you've probably searched for a term surprising enough that I included it in this post.