This post was originally published on Freedom4All; you can view the original in the Freedom4All archive.

This post was part of a series called Fallacies and can now be found under the tag Fallacies.

Earlier in the series, we looked at claims that the Home Office have credibility when debating drugs and also at claims that Cannabis is a dangerous substance.

In this instalment we will look at the myth that the current political position on medical cannabis is what’s required to protect our children from harm.

The easiest way to highlight the fallacy within the suggestion that it’s all about protecting children is by example, so you’ll have to forgive me whilst I cross national borders with the examples I use. It isn’t that there aren’t sufficient examples in the UK, simply that some examples highlight the issue far better.

Let’s begin with the now-clichéd phrase ‘think of the children’. The phrase shot to popularity after it became the catchphrase of Helen Lovejoy in The Simpsons. Generally, if you hear or read the phrase “won’t somebody please think of the children”, the speaker or author is sarcastically referring to the stance of another.

The phrase is often used in relation to Daily Mail articles (though not by the readership themselves).

So, hopefully it’s clear in your mind already that crying “think of the children” is something of a running joke. The question is, why? After all, our children do need protecting from a great many things.

The problem is, our children have been used as a political tool far too many times. A favourite trick of politicians is to spin an argument so that it appears to be about protecting children. Talking of a nation’s young makes for a very emotive argument, and few will feel comfortable trying to argue against ‘saving’ the youth.

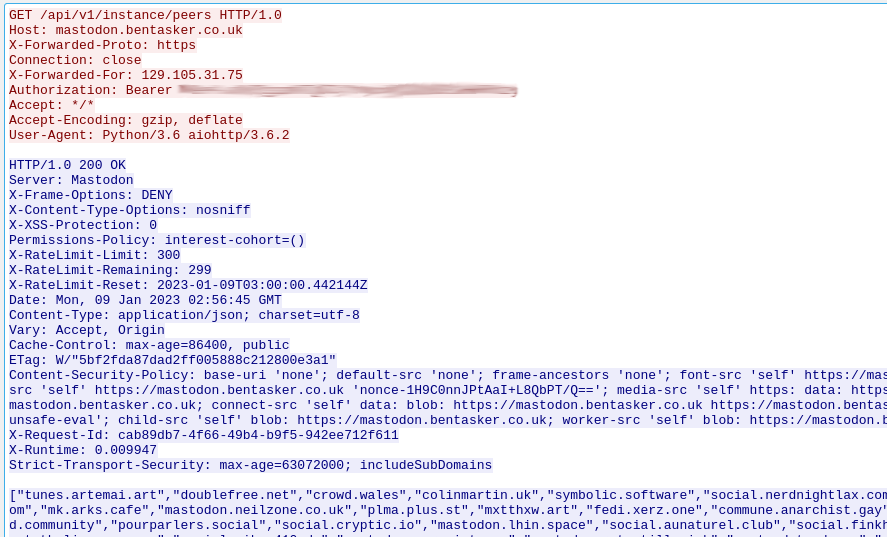

For example: In 2006, the South Dakota Attorney General received a ballot entitled “An act to provide safe access to medical marijuana for certain qualified persons,” and although his duty is to report neutrally on any ballot that he receives, he opted to change the name of the motion to “An Initiative to authorize marijuana use for adults and children with specified medical conditions.”

In a transparent attempt to block the bill’s passage, the AG used the most emotive argument available: children. Anyone who has actually read the ballot would understand that the suggested controls are so tight that there is no additional risk of harm to children. Unfortunately, the bill had to pass through the hands of someone who wanted to score political points.

Well, for his troubles, AG Larry Long found himself on the receiving end of a lawsuit. You can read more on this here and here.

If you’d like a more local example, cast your mind back a year or so to the UK under Labour’s leadership. Who remembers the creation of the hugely unpopular Enhanced Vetting Scheme? At one point, it seemed that anyone who was in regular organised contact with children would need to undergo the new vetting scheme. This included parents who took turns with another child’s parent to collect the kids from school.

Although this aggrieved a great many people, including civil liberties groups, we were told it was because of the risk of paedophiles. In fact, based on the publicity it received, you could be forgiven for thinking that every second adult was a paedo. Once again, the argument used was ‘think of the children’ and anyone opposing the measures ran the risk of being considered pro-paedophile.

A year on, the measures have been scrapped. Do we feel that our kids are any less safe now? No.

The nation was simply taken in by an emotive argument, albeit one which was bolstered by a lot of media activity.

Although I hate using the war on paedophilia as an example, it is the most recent source of examples. Although I believe our politicians and media have lied, bluffed and invented to meet their own agendas, I’m definitely not pro-paedo. There is a problem, and it needs solving, but as with many other things our politicians just get in their own damn way.

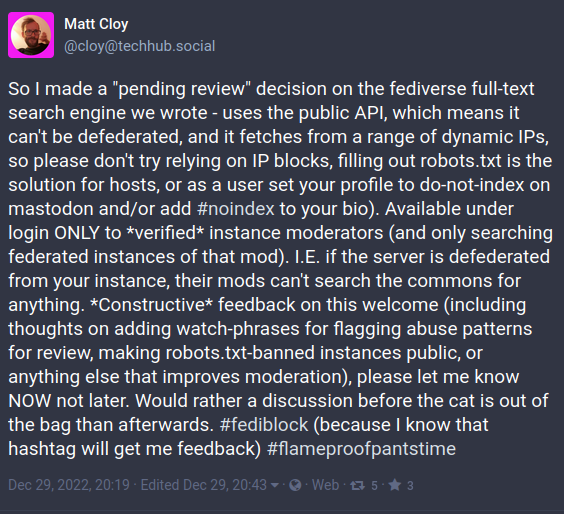

Some may recall the (fairly) recent argument between the head of the child protection agency CEOP, Jim Gamble, and the social networking site Facebook.

For those who don’t, here’s a quick summary:

Mr Gamble wanted Facebook to embed CEOP’s ‘Panic Button’ into their website in order to protect minors from grooming. The idea was that if a child suspects that something is wrong, they can hit the ‘Panic Button’ and the case will be referred directly to CEOP.

On the face of it, it’s a sensible way to help protect children. Unfortunately, because Mr Gamble relied on emotive arguments to further his agenda, he rather undermined his own position. You see, Facebook have their own system allowing users to report others, but this wasn’t sufficient for Mr Gamble.

The next thing we knew, the mother of murdered Ashleigh Hall was in the papers complaining about Facebook’s failure to install the Panic Button. Now Andrea Hall has been through something that no parent should ever have to go through, and she has my deepest sympathies, but she, Mr Gamble and the papers all missed a very important point. A point that everyone else picked up on.

The CEOP Panic Button would work like this:

1. Minor logs into Facebook

2. Minor reads/receives something from another user

3. Minor feels uncomfortable/suspicious

4. Minor clicks CEOP Panic Button

Now, in Ashleigh Hall’s unfortunate case, steps 3 & 4 would never have happened. She believed she was talking to a 17-year-old boy (and didn’t believe anything was wrong) and so would not have clicked the Panic Button.

As horrific as her story is, Andrea Hall was not the right person to use for this agenda. Not only did it undermine Mr Gamble’s argument, but it unnecessarily put Andrea Hall back into the media spotlight. Mr Gamble’s misguided attempt to further his own agenda led to a mother in mourning being forced to undergo public scrutiny once again.

The use of emotive arguments is a very dangerous game: not only can they impede progress but, as in Mr Gamble’s case, they can seriously undermine your position. However well intentioned you may appear to be, if it seems that you don’t actually understand the subject matter, no-one will be willing to listen to you.

Back to Cannabis

As rich a resource as it may be, I don’t wish to talk any further about the various anti-paedo attempts in the UK, as the horrific stories involved make me feel physically sick. All I’ll say about it from here on out is that my deepest commiserations go to anyone affected in any way, whether through abuse or murder. I wish you the very best.

OK, so we’re back on topic.

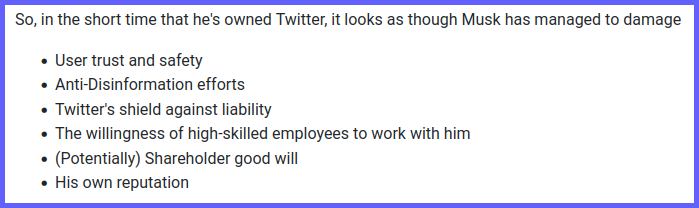

So the Home Office claim that by criminalising anyone using, possessing or cultivating cannabis they are protecting our children. The problem is that this claim just doesn’t withstand proper scrutiny.

Alcohol abuse has been quite a concern recently, with various measures implemented in order to protect the children (and of course to some extent, adults). We all know that you require a license to sell alcohol (actually, this is oversimplifying the matter). But are you aware of the draconian steps taken in the name of ‘protecting children’?

Imagine that you work in a licensed establishment (whether a pub, Tesco’s or anywhere else). You may or may not hold a personal license, depending upon your role within the organisation. Now on your average Friday, you’ll have at least a few minors try to purchase alcohol.

You’re normally pretty strict, but today you make a mistake. That customer you thought was around 25 was actually 17 (and there are more than a few who look like this), and was part of a trading standards ‘test purchase’. Despite your efforts in the past, you receive an on-the-spot £80 fine.

If you’re a license holder (probably the Designated Premises Supervisor) you could get a much bigger fine and/or 6 months in prison. Not to mention the loss of earnings if you lose your license.

Why? You didn’t set out to break the law; it was the kid who tried to purchase it from you who did. Do they get punished (even if they’re not part of a ‘test purchase’)? No. In fact, where I live, kids caught with alcohol get a single punishment: they have to watch whilst the copper pours it down the nearest drain. The unwitting supplier, however, potentially faces a criminal record.

So a law designed to ‘protect the children’ does nothing but criminalise those who make a single mistake. We are all human, we’re not infallible and age is a very subjective thing to guess. In response to the draconian efforts of the law, anyone fortunate enough to look under 25 (or 30 in some places) is required to provide ID.

Make no mistake, it’s not the fault of the retailers; it’s because the UK Government has absolutely no idea how to deal with problems. Yes, there should be a minimum age and yes, retailers who regularly sell to minors should lose their license, but the current system just does not work.

Which is exactly the situation we are in with Cannabis Prohibition. The Government’s current stance does nothing but criminalise anyone involved with the substance. They don’t care whether you are using it recreationally, as pain relief, to offset the symptoms of chemo or as a method of treating and preventing cancer. If you use it, you are a criminal. If your spouse grows it, you are a criminal.

This cannot continue. Cannabis was used medicinally for over 4000 years and is the only effective medication available to some. It’s certainly far safer than many of the regularly prescribed medications available on the NHS, but the Government continue to bolster their argument by asking us to ‘think of the children’.

Ask yourself, did you experiment when you were younger? Would you be at all surprised that any teenager experimented? Now here are some interesting facts, all of them an issue solely because the Government believes that prohibition is the answer:

Hash/Solid/Soap Bar/Cannabis Resin

Regularly ‘cut’ with other chemicals (including boot polish) to raise weight and thus profit

Weed/Green/Bud/Cannabis Leaves

Regularly sprayed with glass (a carcinogen) in order to raise weight and thus profit

So, if we accept that some teenagers will always experiment, how exactly is the above protecting them? Because the Government tries to enforce strict prohibition, it is left to the black market to supply Cannabis. In order to maximise their profit, dealers ‘cut’ their product with anything that will increase the weight. The end user gets to pay Cannabis prices for boot polish/glass.

If you truly want to protect your kids, you need to do two things: educate them about the risks of over-indulgence (which applies to everything) and ensure that there’s a clean supply of anything they might try.

Our Kids are Our Future

It’s often said that kids are our future, and it’s true. So why is it that we are giving them such a poor start in life? Under the current regime, if a minor is caught in possession of Cannabis, they could end up with a criminal record. Suddenly, they are unable to get a job, and so pursue a life of crime.

Make no mistake about it, this is not the fault of Cannabis. It was the Government’s decision to criminalise anyone using this natural substance, and it is they who have ensured that anyone ‘experimenting’ loses their future.

Conclusion

I hope that, in amongst my ramblings, I have shown that when governments claim to be ‘protecting the children’ it’s usually either a sign of an unsustainable political agenda or a warning of future incompetence. In the UK we are criminalising our children and destroying their futures, all in the name of protecting them.

In doing so, we deprive those who can benefit from medicinal cannabis of a safe and effective medication.

From my point of view, prohibition is doing more harm than good and it is time that it was repealed.

I’ll leave you with an interesting quote. The UK Government has claimed that its hands are tied by the UN Single Convention on Narcotic Drugs. However, within the preamble of the convention is the following:

the medical use of narcotic drugs continues to be indispensable for the relief of pain and suffering and that adequate provision must be made to ensure the availability of narcotic drugs for such purposes
