The Curious Case of BitFi and Secret Persistence

For some slightly obscure reasons I've recently found myself looking at the Bitfi hardware wallet and some of the claims the company make, particularly in relation to whether or not it's actually possible to extract secrets from the device.

The way the device is supposed to work is that, in order to (say) sign a transaction, you use an onscreen keyboard to enter a salt, and a >30 char passphrase.

The device then derives a private key from those two inputs, uses it and then flushes the key, salt and passphrase out.

Each time you want to use the device, you need to re-enter salt and passphrase - the idea being that if it never stores any of your secrets, then there's nothing to extract from a seized/stolen device.

From Bitfi's site we can see this wrapped up in marketing syntax:

The Bitfi does not store your private keys. Ever. Your digital assets are stored on the Blockchains, when you want to make a transaction with your assets (move them, sell them, etc.) you simply enter your 6-character (minimum) SALT and your 30-character (minimum) PASSPHRASE into your Bitfi Legacy device which will then calculate your private key for any given token “on-demand” and then immediately expunge that private key.

For various reasons (see Background) I was somewhat dubious about the veracity of this claim, and ultimately ended up looking over their source code in order to try and verify it.

This post details the results of that examination, the following items should be noted

- Although not explicitly vulnerabilities, the issues noted below have been submitted in advance to the Bitfi dev team (I did ask previously via email whether email or Bitfi.dev was preferable for raising issues).

- Incomplete sources are published on Bitfi.dev - example here, so although I include code snippets in this post, it's updated versions of code that's already public - I'm not simply publishing their code on the net :)

- I probably will make some mistakes: I've been ill, so focusing is hard, and I dislike C# so it's more than possible something's changed without me realising.

- This is the result of a fairly short code review, and in no circumstances should be viewed or characterised as a full audit

- In the sources, code version shows as v112

The result is a long analysis, so some may prefer to jump to the Conclusion.

Background

You may remember Bitfi from last year, when they made the mistake of claiming their wallet was Unhackable and then got shown that it wasn't.

You might even have seen John McAfee throw down a $20m challenge but then refuse to let anyone but a single researcher participate, telling that researcher - Andrew Tierney - "I challenged you. Not them. I singled you out for ridicule, not them".

FWIW, I doubt McAfee is ever likely to offer me $20M, but I wouldn't accept an out-of-the-blue invite to his house either, him being the famously balanced and stable person that he's known to be.

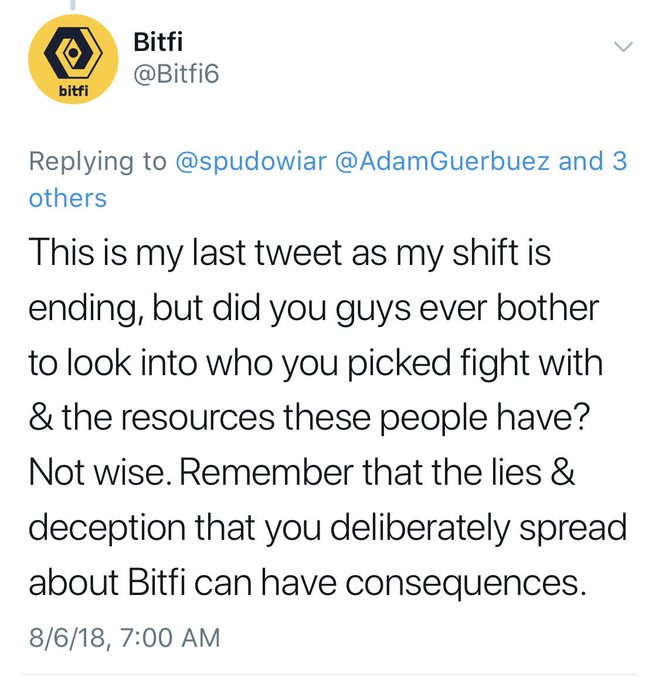

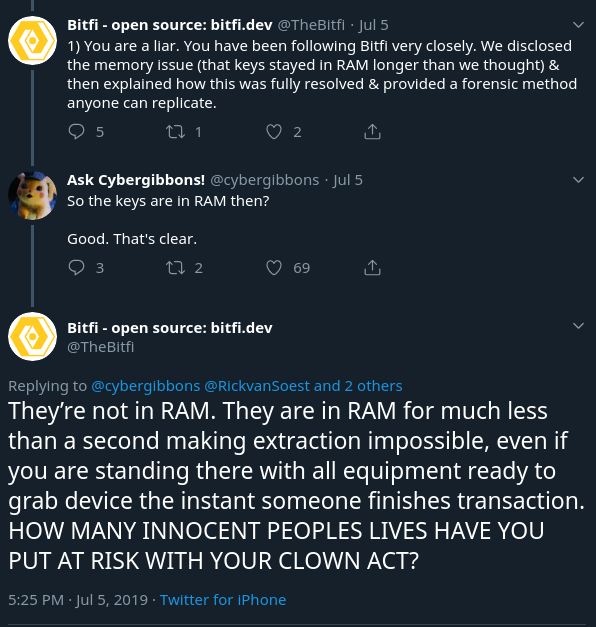

Or, you might have seen Bitfi start threatening the researchers on Twitter:

Suffice to say, there's already quite a lot of previous history and baggage associated with the Bitfi brand.

It's been a weird couple of weeks for me really; I've been properly ill for the first time in over a decade, so have had to slow myself down quite a lot. Although I've still been working, I tried to make sure I got some relative downtime when I had the chance.

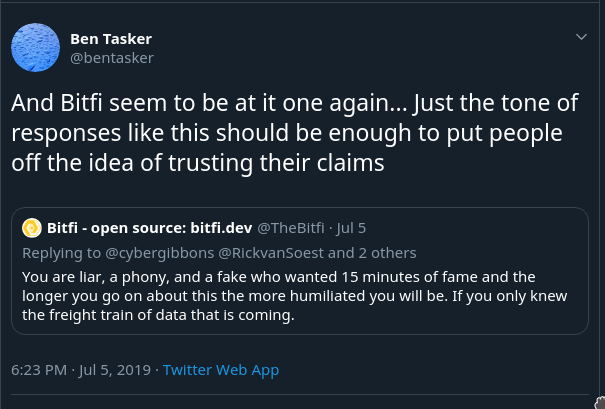

Ultimately, that meant I ended up spending more time than usual on Twitter, and got pulled into the fray after quoting a tweet where they were throwing abuse at a researcher - Andrew Tierney again:

They replied to my tweet saying that you should trust no-one, and users should verify for themselves, so I pointed out they'd literally just been quoted getting shouty at someone who had tried to verify their claims.

In response they directed me to Bitfi.dev, which at the time 404'd on port 80 rather than redirecting users to HTTPS. Great start...

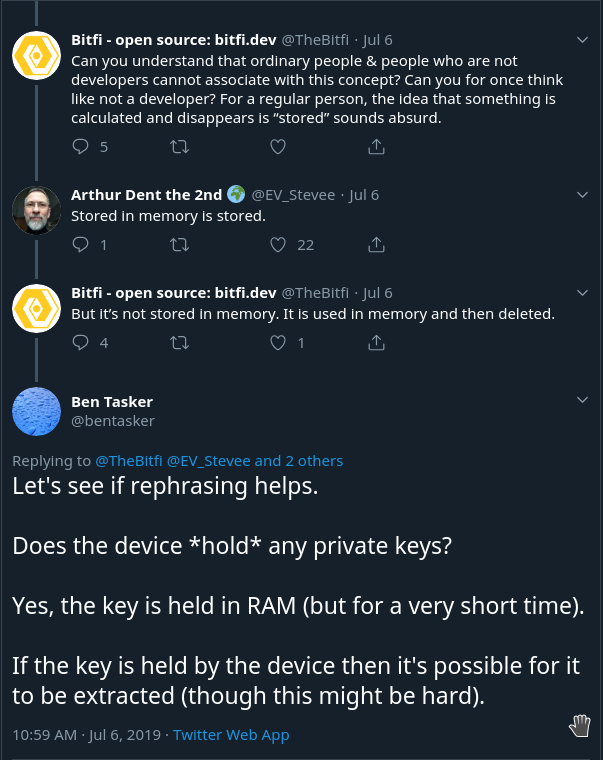

To cut a long story short, the company (and particularly one of their founders - Daniel) were extremely combatative in Twitter threads, and amongst making threatening statements (see this thread) and an attempt to gaslight me, also seemed determined to die on the hill of semantics

It was repeatedly claimed that they'd fully fixed the previous issues with secrets persisting in RAM, and that it is impossible for anyone to extract secrets from the device (although they did later accept that getting malware onto a user's device would be a viable angle).

Drama does have a habit of pulling you in, and all of this served, to pull me deeper into the fold, turning me from a slightly disinterested commenter to someone interested in looking verifying some of their claims.

The threads on Twitter were pretty long and sprawling, so I'll stop here and move on.

Despite the two Bitfi associated accounts frequently referring myself and others over to Bitfi.dev, it took quite some time to actually get any of our keys approved so that we could actually start looking at things, but eventually we were given access to the full source, rather than the incomplete version publicly published.

Given that others were already going to be running and attacking an emulated device (as well as loading the software onto hardware of their own), I figured I could spend a little bit of time performing a simple code review - particularly as the ability to easily stop/start aligned well with my needs.

The Claims

Although happy to pick up on anything else I spotted at the same time, the primary aim was to verify the claims below. Each has been made multiple times in multiple places, so I've just linked to the single claim (as there's now a lot to search through).

- After you complete a transaction on a (genuine) Bitfi device with no malware, someone seizing it will have no means whatsoever to extract private keys. Claimed on Twitter as well as on the site.

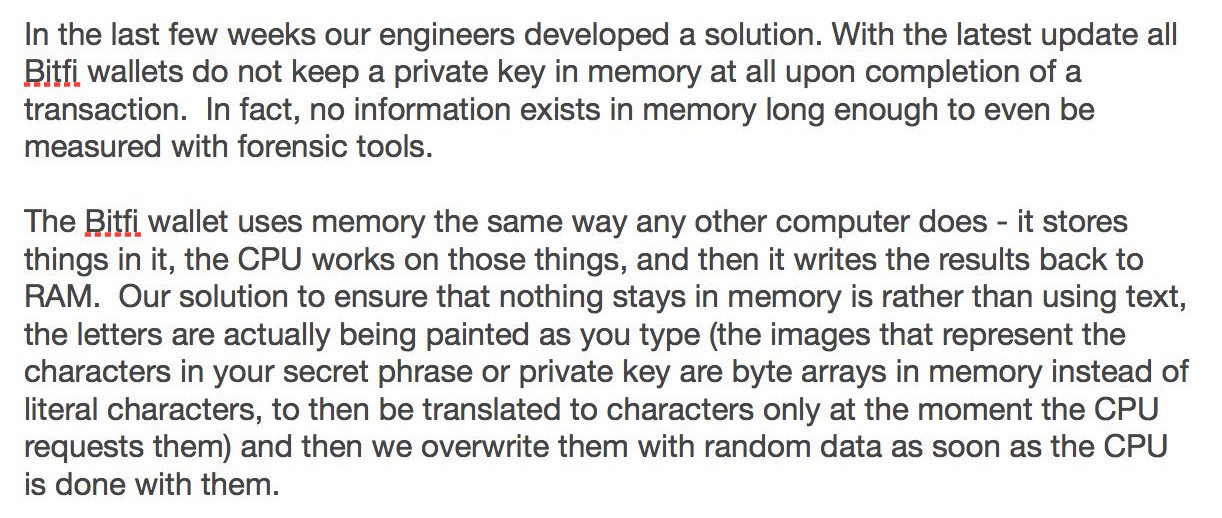

- That secrets are securely overwritten by the application (despite being a userland application written in a language - C#/Mono - with a garbage collector)

- Input characters are painted/drawn in RAM rather than being literal characters so that nothing stays in memory (original claim here, copy here).

The last of those being as much curiosity about the implementation as anything.

The result, inevitably, was a file of long sprawling notes as I read through the code. The content below is written in a (hopefully) more intuitive order - for what it's worth, because of my interest in (3) above, I actually started in GetExtKey() and worked back.

Initial Assumptions

I decided that, to keep things simple, I'd initially assume that secure overwrites were effective, so that I wasn't polluting each section of my notes with the phrase "if an overwrite actually works". If nothing else, the effectiveness of any overwrite will depend on the types it's being used against.

What you do need to know though, is that the secure erase methods are:

Sclear.EraseIntegers()Sclear.EraseBytes()

There is a third method - Sclear.Clear() - which is simply a wrapper for .NET's Array.Clear().

SecretActivity

The class SecretActivity within the codebase defines a whole bunch of event handlers.

It has a couple of attributes we're interested in:

knoxsecrknoxsalt

Both of which are integer arrays. As a user presses buttons on the keyboard, mapping integers (but not ascii char codes) relating to that character are pushed into the relevant array.

Although some de-obfuscation is required, we can consider these arrays as being extremely sensitive - they allow us to discern the (only) two bits of user input which are used to derive the private key.

So, we'd expect to see that these two variables are - consistently - securely erase once no longer required.

bw_DoWork()

The method bw_DoWork() is one of the event handlers within SecretActivity and as the name suggests, appears to be one of the main workhorses.

As such, we see both knoxsecr and knoxsalt quite a lot, but unfortunately they're not always handled properly:

using (NoxKeys.NoxKeyGen keys = new NoxKeyGen())

{

string b64 = keys.GetTestHash(knoxsalt, knoxsecr);

if (string.IsNullOrEmpty(TryAddressHash)) //instruct to repeat info

{

TryAddressHash = b64;

b64 = "0";

e.Result = 1001;

Sclear.Clear(knoxsalt);

Sclear.Clear(knoxsecr);

return;

}

If TryAddressHash is null/empty (as it is by default), this section will return without securely overwriting either of these two important arrays.

From a review of the codebase, we can see that this conditional fires regularly - it's a mechanism used to push a verification dialog to the user, and in the else of this conditional we can see what happens if the user's verification does not match

if (b64 != TryAddressHash || string.IsNullOrEmpty(b64)) //repeat or cancel dialog if no match

{

b64 = "0";

e.Result = 8;

return;

}

If a user's second input differed from the first, we'll return without running so much as a Clear() (much less an overwrite) on our secrets generated from the second input.

That's extremely problematic if the users second input was the valid one and it was the first (in theory, cleared) input that contained the error.

Assuming we don't enter that conditional block, we move onto where the work really begins

Sclear.LastHashAtp = TryAddressHash;

AdrCollection collection = keys.GetNewWalletCollection(knoxsalt, knoxsecr);

TryAddressHash = "0";

string btcadr = collection.BTC;

string ltcadr = collection.LTC;

string ethadr = collection.ETH;

string[] moneroadr = collection.XMR;

string neoaddress = collection.NEO;

With the benefit of hindsight, we know that it's only the first 2 lines which we need to care about here

-

Sclear.LastHashAtphas a hash written to it -

NoxKeyGen.GetNewWalletCollection()is called (and passed salt and secret)

The reason we care about either of these will become clear later

Other Treatment of knoxsalt and knoxsecr within SecretActivity

For the purposes of brevity, I won't quote code snippets for each of these. However, the following are all locations where knoxsalt & knoxsecr are written to in some way within SecretActivity.

"Overwritten" means they were passed into Sclear.EraseIntegers(), and redefined means knoxsalt = new int[0]; knoxsecr = new int[0];

- For unknown reasons, within method

OnCreatethey are set to null, and then redefined a few lines later - In

vbw_RunWorkerCompletedthey are overwritten. This method does not appear to fire afterbw_DoWork() - Within

ProcessMsgTask()both are overwritten - Within

OnBackPressed()they are overwritten. This method appears to fire when the user presses back to exit the app - Within

NoxDispose()they are both overwritten - Within

ibw_runWorkerCompleted()they are redefined but not overwritten - Within

gbw_runWorkerCompleted()they are redefined but not overwritten - Within

abw_RunWorkerCompleted()they are are overwritten. This method does not fire afterbw_DoWork() - Within

tbw_RunWorkerCompleted()they are redefined -

Within

bw_RunWorkerCompleted()(which does run afterbw_DoWork()) they are redefined and not overwritten

So, we can see there are multiple instances within the class where these secrets are redefined rather than being securely overwritten.

The result of simply redefining is that the old contents will sit on the heap until the Garbage Collector deals with them - which may prove to be an unacceptably long period of time.

SecretActivity Issues

So, to summarise the issues we've seen so far within the SecretActivity class:

- Secrets are not securely erased/overwritten from the users first input

- Secrets are not erased at all if the user's second input does not match the first

- In multiple places within the class, secrets are discarded onto the heap without an overwrite

The result is that (already) we have a lot of secrets being left on the heap, with the one mitigation being that the values are lightly obfuscated rather than being literal characters or ascii char codes.

In the case of bw_DoWork() it seems that not overwriting the secrets was a deliberate choice.

The rationale being that complexity/risk needed to be reduced within this method as it's where addresses are created - the concern being that making an error and creating an unspendable address would be bad (not an unreasonable point). As GetExtKey() performs some level of overwrite, it was felt it was safe/best to leave the overwrite calls out.

I can see the rationale in this, although I'd argue the consequences of losing control of secrets are greater, it's ultimately a case of balancing risk, and the dev who wrote bw_DoWork() felt it better to err on the side of caution.

NoxKeyGen

The NoxKeyGen class, as the name suggests, handles the key generation implementation - taking user's salt/passphrase, de-obfuscating the input and deriving a master key from it (before then deriving per-currency keys from that).

GetNewWalletCollection()

We saw this method being called above. It performs a few fairly simple steps, but the impact of them is fairly important.

First it calls NoxKeyGen.GetExtKey() in order to derive the master key from salt and password (more on that below), then it calls a number of currency specific functions, passing those the master key

ExtKey masterKey = GetExtKey(SecretSalt, SecretPW);

adrCollection.BTC = GetFirstBTC(masterKey);

adrCollection.LTC = GetFirstLTC(masterKey);

adrCollection.ETH = GetFirstETH(masterKey);

adrCollection.NEO = GetFirstNEO(masterKey);

adrCollection.XMR = GetFirstXMR(masterKey);

As a function it's fairly innocuous, but there's hidden trouble in some of the functions it calls

GetFirstBTC()

The method GetFirstBTC() is almost identical to the counterparts listed above, the primary difference being in the 1st and 5th lines (where the currency name is passed in).

ExtKey masterKeyA = masterKey.Derive(GetCurrencyIndex("btc"), hardened: true);

ExtKey key = masterKeyA.Derive((uint)0);

byte[] keybytes = key.PrivateKey.ToBytes();

Key pkey = new Key(keybytes, -1, false);

var resp = pkey.PubKey.GetAddress(GetBLKNetworkAlt("btc")).ToString();

Sclear.EraseBytes(keybytes);

masterKeyA = null;

key = null;

pkey = null;

return resp;

It's not really clear why there isn't just a single function which takes the currency as an argument, but that's not a security issue...

The chain of events here is fairly important though

- Derive

masterKeyAfromMasterKeyfor whatever currency we're using (in this case BTC) - Perform another derivation, then dump the private key bytes into a byte array

keybytes - Generate a new instance of (3rd party) object Key passing in

keybytes, assign it to variablepkey - Use

pkeyto access the public key and get the address for whatever currency (in this case BTC). Assign toresp - Overwrite the byte array

keybytesusingSclear.EraseBytes() - Redefine

masterKeyAandpkeyto null. Do not overwrite them - Return

resp

So in step 3 a new object instance of type Key was created and passed the byte array keybytes.

However, once this object was no longer required the reference to it was simply removed (by setting pkey to null). So, that object is now sat, with it's key material, dereferenced on the heap, waiting for the Garbage Collector.

The same applies to the key generated in Step 2 (this time type ExtKey) - the source of the data written into the byte array keybytes in the first place.

Class ExtKey uses ISecret (so implementing IDisposable) but class Key does not.

GetExtKey()

GetExtKey() is actually the first function I looked at as it's one of the functions that implements the character 'painting' (claim 3) I was interested in looking closer at.

The method accepts the Salt and Password as int arrays (basically references to SecretActivity's knoxsecr and knoxsalt).

It then de-obfuscates them by iterating over and translating the mapping integer into the byte value of the relevant character

byte[] ssalt = new byte[SecretSalt.Length];

for (int i = 0; i < SecretSalt.Length; i++)

{

ssalt[i] = Sclear.SCharImage(SecretSalt[i]).SBYTE;

SecretSalt[i] = -1;

}

Each byte of SecretSalt is then nulled.

As sensitive as we might have considered knoxsecr/knoxsalt, ssalt and spw are all the more sensitive because they contain ASCII bytes representing the user's input.

Once the de-obfuscation has been performed, the salt and password are passed into SCrypt in order to derive a key

byte[] der = NBitcoin.Crypto.SCrypt.ComputeDerivedKey(spw, ssalt, 32768, 8, 4, 4, 64);

We then reach a conditional

if (!string.IsNullOrEmpty(Sclear.LastHashAtp))

This is why I noted above that LastHashAtp had been written to. If we've reached GetExtKey() via bw_DoWork() then LastHashAtp should not be null.

However, if we've reached via another code path then it will be (it only gets updated in bw_DoWork()), causing a (minor) issue

using (var Tshacrypt = new System.Security.Cryptography.SHA256Managed())

{

byte[] Thash = Tshacrypt.ComputeHash(der);

string tval = Convert.ToBase64String(Thash);

if (tval != Sclear.LastHashAtp)

{

throw new Exception("Information does not match.");

}

Sclear.LastHashAtp = null;

}

Within this block, we generate a SHA256 of the derived key (der) and store that in a byte array. However, we then base64 encode it and store it in an immutable type - a string.

That string may well persist in memory for quite some time, and is a direct hash of our derived key.

The harm from this is (probably) relatively minor, but it seems clumsy, especially given claims on Twitter that this thing should be safe against Nation States (who, if anyone, have the resources to try and bruteforce a hash).

Finally, as the function wraps up we create a master key from the derived key and a hash of the salt before returning

using (var shacrypt = new System.Security.Cryptography.SHA256Managed())

{

byte[] hash = shacrypt.ComputeHash(ssalt);

ExtKey masterKey = new ExtKey(der, hash);

Sclear.Clear(SecretSalt);

Sclear.Clear(SecretPW);

Sclear.EraseBytes(ssalt);

Sclear.EraseBytes(spw);

Sclear.EraseBytes(der);

Sclear.Clear(hash);

return masterKey;

}

However, you might note that although we've called secure overwrite for ssalt, spw and der, we've only used Clear() on SecretSalt, SecretPW and hash...

So, once again, we've seen the codebase's inconsistency with whether Salt and password get securely overwritten (and we already know that when called from bw_DoWork() they're not subsequently overwritten).

NoxKeyGen Issues

There are a number of instances within the class where secrets and keymatter are left to rot on the heap rather than being overwritten

-

GetFirstBTC()and it's analogies for other currencies leave two different key instances (containing raw key bytes) on the heap. Thekeybytespotentially exposed are those of the private key calculated for that currency. -

GetExtKey()can, under some circumstances, leak a hash of the derived key into an immutable type -

GetExtKey()fails to securely overwrite SecretSalt and SecretPW when returning

Sclear

The class Sclear is where both the image based obfuscation, and the secure erasure are performed.

I'll address the use of images later, as it probably warrants it's own section, but it's time to look at how the erasure is actually implemented.

Due to the similarity between these methods, I'll offer them without comment and then talk about the efficacy of the overwrites below.

EraseBytes()

The method EraseBytes() is passed (unsurprisingly) a byte array. It's a fairly simple function

public static void EraseBytes(byte[] keybytes)

{

if (keybytes == null) return;

for (int i = 0; i < keybytes.Length; i++)

{

keybytes[i] = 48;

}

Clear(keybytes);

}

So, what we can see is

- The code iterates over the input array once

- It writes value 48 (an ASCII 0) into each position

- It then calls Clear()

EraseIntegers()

The function EraseIntegers() accepts an argument of type int[]. It otherwise functions more or less the same way as EraseBytes():

public static void EraseIntegers(int[] array)

{

if (array == null) return;

for (int i = 0; i < array.Length; i++)

{

array[i] = -1;

}

Clear(array);

array = null;

}

Clear()

The method Clear() accepts an array, and then calls C#'s Array.Clear() on it

public static void Clear(Array array)

{

if (array != null)

{

Array.Clear(array, 0, array.Length);

}

}

Efficacy of Overwrites

This is an important section as it directly addresses Claim 2:

That secrets are securely overwritten by the application

So, at this point, there are a few things we know about the actual usage of EraseBytes() and EraseIntegers(), including that it's something called against byte arrays which directly contain key matter (rather than simply being references to the image obfuscation).

The Bitfi app is written in C# and runs in Mono for Android - the important facet of this fact is that there's a garbage collector (GC).

One of the things that the GC sometimes does is move objects around in the heap, so when you write into an array there's no implicit guarantee that you'll be writing into the same memory location that was used when the array was first written into.

It may, instead be, a new location the GC has copied the data too. As a result, the original copy may still persist in RAM - it's even possible that more than one copy will exist.

It is possible to defend against this - to some extent - by pinning the relevant byte arrays. However, this has not been done.

The issues inherent with using byte-arrays for particularly sensitive data are one of the reasons that the SecureString class was created.

Before SecureString was conceived though, techniques such as those shown above were used, but it was common practice to perform multiple overwrites (many people considered 5 iterations to be the minimum, with each alternate iteration writing 1s instead of 0s). Although that approach is, itself flawed (you could just as easily find you write to 5 different memory locations), the Bitfi implementation does not currently meet even that humble standard.

Although not commonly used on mobile devices like the one Bitfi is based upon, it's worth noting here that there are also concerns if swap is enabled as that "erased" data may persist on disk for extremely long periods of time.

So, to conclude - it's extremely likely that there will be times when the attempt at secure overwrite is wholly ineffective, leaving the user's secrets sat in RAM for an unknown period of time.

Image Painting

Finally, we come onto the bit I was most curious about. The idea that rather than using text, letters you type are being drawn, and images are used in memory instead of characters.

This was apparently implemented last year after the successful compromise and key extraction of their Unhackable device.

The Concept

There are a handful of snippets you'll need to be aware of to help with explaining how this all hangs together, but the basic concept is that there is an array in memory. Each element within that array contains a struct containing an image and it's associated data, using the following attributes

- A mapping integer (known as

POS) - A decimal char code (

DEC) - A Bitmap object (

BITMAP) - An ASCII byte (

SBYTE) - An ASCII Character (

SCHAR)

These structs are defined as follows

internal readonly struct RCharImages

{

internal readonly int POS;

internal readonly int DEC;

internal readonly Bitmap BITMAP;

internal readonly byte SBYTE;

internal readonly char SCHAR;

internal RCharImages(int _pos, int _dec, Bitmap _bitmap, byte _sbyte, char _schar)

{

POS = _pos;

DEC = _dec;

BITMAP = _bitmap;

SBYTE = _sbyte;

SCHAR = _schar;

}

}

Each supported character on the keyboard has it's own element within the array, and these are built one by one by code like the following

private static RCharImages[] ASet()

{

List CharImageList = new List();

CharImages item;

item = new CharImages(); item.DEC = 97; //item.CHAR = "a";

item.B64 = "iVBORw0KGgoAAAANSUhEUgAAAAcAAAAMCAYAAACulacQAAAAf0lEQVQoU7XQMQ4BUQCE4e9p9wA6tWKPsQlxAMdwBGuLrVQqF1ApFUKiUOu2cwitSsKGJ0HEdqb9M/knEzQk/AuW2i726D4VJy2ZserbOTFHR2IYYW4kmL2NW0dY6LnZCPpyWx/NCBcPzz1XOxxicypxtsQAR6yQvpw/nmh8qAZyxRj7W2dbdwAAAABJRU5ErkJggg=="; item.POS = 0; CharImageList.Add(item);

Yes, that is one extremely long line with multiple statements on it.

Type CharImages is a simple class with 3 attributes, so I'll not include the structure here as it's only a temporary creation anyway.

The base64 string is literally a PNG of the associated character:

Each character has it's own set of lines like those above. Once they're defined, the List is iterated over:

List RCharImageList = new List();

foreach (var imgv in CharImageList)

{

byte[] BTS = Convert.FromBase64String(imgv.B64);

using (Bitmap bitmap = BitmapFactory.DecodeByteArray(BTS, 0, BTS.Length))

{

Bitmap bitmap2 = Bitmap.CreateScaledBitmap(bitmap, bitmap.Width * 2, bitmap.Height * 2, true);

RCharImages rChar = new RCharImages(imgv.POS, imgv.DEC, bitmap2, Convert.ToByte(imgv.DEC), Convert.ToChar(imgv.DEC));

RCharImageList.Add(rChar);

}

}

return RCharImageList.ToArray();

What's happening here is :

- the PNG is being base64 decoded then turned into a bitmap object, and doubled in resolution.

- A struct of type

RCharImages(the one we discussed above) is created for each letter - The list is ultimately converted to an array, which becomes

Sclear.SCharImage

Lookups/Mappings

What you get is an array where you look up values using the mapping integer, so taking our original definition snippet above Sclear.SCharImage[0]'s attributes would have the values:

-

.POS: 0 -

.DEC: 97 -

.BITMAP: <Bitmap Object> -

.SBYTE: 0x61 -

.SCHAR: a

So when mapping integers are observed elsewhere, what they're referencing is attribute POS.

Usage - Processing

When Sclear.SCharImage is used in the context of deriving keys, the usage is always to look up using the mapping integer and then extract attribute SBYTE

ssalt[i] = Sclear.SCharImage(SecretSalt[i]).SBYTE;

At the various points where this is done, the result is that there will be a byte array containing ASCII bytes in memory, so the code tries to only call this just before the key is needed (concerns about erasure not withstanding).

Usage - Output

There are of course two ends to this process - it's all very well having a mechanism to de-obfuscate the mappings, but how is the obfuscation implemented, and which of the other attributes (if any) are used?

To understand this, we need to look a little at usage of Sclear.SCharImage with the class SecretActivity.

We see it used (unsurprisingly) when creating the buttons on the keyboard, under various conditional paths

The mapping integer is written into each button's Tag attribute (manually), with some conditionals driving variations in the Tag used:

if (capson)

{

mButtonA.Tag = 26; mButtonB.Tag = 27; mButtonC.Tag = 28; mButtonD.Tag = 29; mButtonE.Tag = 30; mButtonF.Tag = 31; mButtonG.Tag = 32; mButtonH.Tag = 33; mButtonI.Tag = 34; mButtonJ.Tag = 35; mButtonK.Tag = 36; mButtonL.Tag = 37; mButtonM.Tag = 38; mButtonN.Tag = 39; mButtonO.Tag = 40; mButtonP.Tag = 41; mButtonQ.Tag = 42; mButtonR.Tag = 43; mButtonS.Tag = 44; mButtonT.Tag = 45; mButtonU.Tag = 46; mButtonV.Tag = 47; mButtonW.Tag = 48; mButtonX.Tag = 49; mButtonY.Tag = 50; mButtonZ.Tag = 51;

}

Yes, again, that's one long line with multiple statements

We then see the tags later being used to look up the mapping and set the button to have the appropriate character as it's text

foreach (Button b in buttonLIst)

{

b.SetText(new char[] { Sclear.SCharImage((int)b.Tag).SCHAR }, 0, 1);

}

So here, the button is being defined to display the relevant character.

What this means, is that we now have, in memory a set of elements which contain both the literal character and the mapping ID. So, it's a point at which the mapping could be discovered without access to the source (though the mapping is publically published anyway).

An event is created so that when a button is pressed, KnoxAdd() is called

KnoxAdd((int)mButtonSpace.Tag);

KnoxAdd() itself is fairly simple:

It triggers haptic feedback and then passes the mapping integer into NXArray_Add(). The job of this function is simply to extend the salt/passphrase array (whichever's being edited) and push the new mapping integer into it.

private void NXArray_Add(int addval, int[] nxlist, out int[] updated)

{

updated = new int[nxlist.Length + 1];

for (int i = 0; i < nxlist.Length; i++)

{

updated[i] = nxlist[i];

nxlist[i] = -1;

}

updated[nxlist.Length] = addval;

Array.Clear(nxlist, 0, nxlist.Length);

nxlist = null;

}

The SClear erase functions aren't called against nxlist here, despite the fact it contains the salt entered so far, instead there's what feels like a slightly half-hearted reimplementation (setting each element to -1).

The result being that this function may very well leak the mapping onto the heap each time a key is pressed (although I wouldn't expect it to be every time).

Having received it's updated int[] back, KnoxAdd() then calls KnoxUpdateView() which uses the BITMAP attribute to display the pressed character on screen - avoiding pushing a string into the display layer.

Assessment

The use of the image mapping mechanism is... unusual. It certainly seems inefficient to have the application convert from PNG to Bitmap and scale up on each run, but that's not overly relevant here.

It adds a lot of complexity, and appears to be trying to achieve 2 aims:

- Preventing strings containing the salt/passphrase being passed through to the display layer

- Protecting the secrets in memory until they're needed

As odd a mechanism as it is, it does seem to achieve the first.

The second, however, really is a bit more nuanced.

It's true that, with a heap inspector, I'm unlikely to see a byte array of the format

[m,y,s,e,c,r,e,t]

(Ok, you wouldn't anyway, as that's not quite how they're stored)

But what I need to look for instead is an integer array with values

[12,24,18,4,2,17,4,19]

The value of POS for any given mapping can be discovered in a number of places (including the public internet), so anyone developing malware targeted at Bitfi shouldn't encounter many issues here.

If the concern is discovery following seizure, so long as the values persist in memory (and they certainly do at times), a good examiner will find their way through the mapping. It is at best, an extremely minor barrier, and the complexity of the implementation has led to potential sources of leakage like that in NXArray_Add().

It's a minor point, but if we look at what was originally said by Bitfi, it's no longer true/accurate (if it ever was):

(Uncropped version here for those who want full context)

We've seen in Sclear.EraseBytes() and Sclear.EraseIntegers() that random data is not used, instead each element in the relevant array is set to an ASCII zero (0x030).

So, although it was interesting to look over, as an implementation I'm not sure it adds much that couldn't have been handled with a much less complex mechanism:

- As now, pushing images instead of strings to the activity layout (preventing loss of control of those strings)

- Maintaining a simple char to char mapping for retrieving the images (i.e. key 'a' submits 'a' or the ascii char code, and the 'a' bitmap is retrieved from the relevant struct)

In effect, removing the need for all calls like that seen in Usage - Processing and then removing/simplifying all but the call to KnoxUpdateView() in Usage - Output.

That way, there's no requirement for byte arrays such as ssalt (in GetExtKey()) to even be created. The current model is unnecessarily increasing the number of sensitive byte arrays that might exist in memory (i.e. instead of just SecretSalt you now have to make sure ssalt is sufficiently protected too).

Conclusion

Finally we get onto the conclusion (and if you've skipped to this point, you've missed sooo much!).

So, I was looking at 3 specific claims that Bitfi have made:

- After you complete a transaction on a (genuine) Bitfi device with no malware, someone seizing it will have no means whatsoever to extract private keys. Claimed on Twitter (see here) as well as on the site.

- That secrets are securely overwritten by the application (despite being a userland application written in a language - Mono - with a garbage collector)

- Input characters are painted/drawn in RAM rather than being literal characters so that nothing stays in memory

Claim 1 is in some ways, very heavily dependant on Claim 2 - if the secure overwrite/erase does not function as intended then you're going to be left with secrets in RAM,which will be available for someone to seize. It's worth noting here that some of the timescales Bitfi use are particularly optimistic:

However, with Bitfi we can enter an ordinary passphrase right in front of you (while standing inside such a lab), give it to them that instant, and extraction will not be possible.

(he's referring to a forensic lab there).

So, as well as the claim that a seized device cannot have keys extracted from it, he's saying he could stand in a pre-prepared lab, enter the passphrase, hand the device over and that lab would not be able to extract the key matter.

As I said to him in response, that's a strong, strong claim to make, and one that I believe is contradicted by the evidence laid out here.

From a simple code review we can see that there are a lot of opportunities for secrets to persist in RAM, arising from code paths where variables containing those secrets are not passed for secure erase:

- In

SecretActivity: Secrets are not securely erased/overwritten from the users first input - In

SecretActivity: Secrets are not erased at all if the user's second input does not match the first - In multiple places within

SecretActivity, secrets are discarded onto the heap without an overwrite - In

NoxKeyGen:GetFirstBTC()and it's analogs leave two different key instances (containing raw key bytes) on the heap. The key bytes potentially exposed are those of the private key calculated for that currency. -

GetExtKey()can, under some circumstances, leak a hash of the derived key into an immutable type -

GetExtKey()fails to securely overwriteSecretSaltandSecretPWwhen returning

It's also extremely questionable whether those secure erase methods are even actually effective, so there's a very strong possibility that material may still leak onto the heap even where developers have not failed to call those methods.

The Image mapping mechanism achieves one of it's aims, but (IMO) fails to effectively achieve it's second, and at the cost of introducing complexity which has led to secrets being leaked onto the heap. The additional complexity of the current model has also doubled the number of secret byte arrays that the code must manage, increasing exposure to the risk of something not being correctly erased.

At best the image mapping raises the bar a little, but certainly not beyond the level of most labs, and certainly not a State Actor.

Taking the claim precisely as written though, I think it's fair to conclude it's failed - through the introduction of complexity, it's accidentally caused data to persist in memory rather than preventing it.

Now, all of this is based upon a reading of the code, and is - to a certain extent - therefore theoretical. Perhaps there's something or some protection that I've somehow missed?

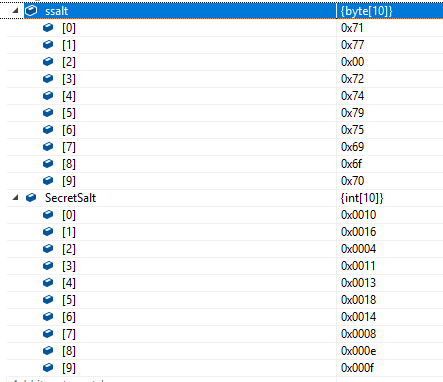

Well, last night, one of the researchers involved in the cracking of the unhackable claim, and therefore the object of Daniel's ire - Andrew Tierny AKA Cybergibbons sent me the result of a memory inspection

So we can see that both ssalt and SecretSalt are available for the taking.

ssalt is the de-obfuscated form of SecretSalt generated within NoxKeyGen().

As there's code duplication, it's hard to say for sure which method it'll have come from, but:

- Those that do use

ssalttend to callSClear.EraseBytes()on it, suggesting that's not wholly effective - Given the null (

0x00) in position 3 it's possible this is a partial copy created by the GC - if that were the result ofSClear.EraseBytes()it should have value0x30and there doesn't seem to be anywhere in the source where any part ofssaltis nulled. - There are code paths out of those functions that do not call the erasure mechanisms - generally when throwing an exception, so it's persistence could relate to that

As a final exercise lets recover the salt from those values. We know that ssalt contains ASCII char codes, and use the known mapping to use SecretSalt to verify the result

ssalt |

SecretSalt |

|---|---|

|

|

So, we can see (and have verified) that the salt was almost certainly the string qwertyuiop.

So, Claim 1 is (IMO) disproven both at a practical and a theoretical level.

It's about how things are approached

Some of the mistakes detailed in this post are understandable, it's a complex code base and the product is relatively immature. But, there are some issues inherent in the very design of Bitfi's device:

- They're handling key matter (sometimes poorly) in a language with a Garbage Collector

- The device is (in effect) a mobile phone running Android with their codebase running within Mono for Android. As a result, the attack surface is absolutely huge compared to something running a less general purpose OS

That's not to say that some of the issues couldn't be fixed, but given the company (and particularly it's visible leadership's) extremely combatative stance on Social Media, it's hard to believe that the issues will be accepted and properly addressed.

However, my conversations with their CTO - Michael - have been very productive (others have found that too).

He's resolved some issues in other areas of the company's infra (that their social media team had rejected as even being issues), as well as displaying a commitment to fixing issues raised.

Their developer messages refer to the preloader having been replaced in order to implement best practice, as well as an intention to allow end users to reflash their devices with a trusted firmware (I presume the instructions for that would include details on how to verify that firmware first). There was no sign of this in the sources I was given, but that doesn't mean it doesn't exist.

So, despite the company's questionable approach on social media, it does look like there's a will within the company to recognise and address issues with the product.

I do wonder if the much vaunted Defcon appearance (and claimed subsequent release of information from forensic testing) is going to be with so far unannounced new devices/models. New models with various important issues fixed (like reducing vulnerability to cold boot attacks), so that it's harder to extract the information from memory in the first place.

A cynic might even suggest that the current round of SM drama might have been started to create hype and increase focus on the company at Defcon, so that they can extract maximum publicity from a launch.

Outside Verification

One thing I am now extremely dubious about is the claim that the device has been verified, much less checked "with a magnifying glass", by the developers behind the various blockchains that have Bitfi as their official hardware wallets.

I find it extremely hard to believe that the developers of Apollo, Digibyte, Groestlcoin and Luxcoin could all have looked and missed so much potential for secret leakage.

So either the claim is false, or those currencies should probably be treated with a significant amount of suspicion too. My (somewhat skeptical) view is that they were probably sold on the marketing claims and made the decision to adopt Bitfi based on a simple usability test - others more cynical than I have suggested that having McAfee talk about their currencies might also have been a driver.

The Business Cost

For me, the underlying damage in all this though, is to trust.

No hardware wallet is ever going to be perfect, particularly one as immature as BitFi's product, but I need to be able to trust that when a wallet vendor says something it's true (even in a trustless marketplace there needs to be some trust) especially when talking about security issues with the product.

That just doesn't seem to be the case with Bitfi, and for me that's without doubt the most damaging part of this entire post.

My Interests

I shouldn't need to write this last section really, but having seen the conduct of the two BitFi related accounts on Twitter it seems better to answer some questions in advance:

- Yes, I do hold/own some Bitcoin

- No, I do not own a Bitfi

- No, I do not own a Trezor, Ledger or other hardware wallet

- No, I'm not going to disclose how I store those Bitcoin as it's irrelevant

- Yes, I'm aware of other serious attacks on other devices, such as this recent claimed Trezor issue. I've also read enough of the post to have noted that it's entirely mitigated by using a strong passphrase, so using a phrase even half the length of that required by a Bitfi prevents this physical attack. More importantly, though, Trezor's response to this is reasonable and offered without hubris

- It's worth noting in the post above, Trezor are very clear that physical attacks are not a significant part of the threat model they're trying to address. Bitfi, conversely, have been very clear that it is for them.

- If I was asked to recommend a hardware wallet, the recommendation would be based as much on the vendor's visible approach to issues with their product, whether that's user support, responding to criticism, or responses to security issues.

My interest in looking arose purely as a result of the combatative and uncompromising approach of the @TheBitFi and @KhesinDaniel Twitter accounts.

My experience in those threads, combined with the unrelenting claims that users could verify their devices (later denied by their own dev team) instilled a familiar feeling of bloody mindedness which tends to lead to me delving into things I'd otherwise have little interest in.

For me, this whole thing began with me commenting on the impact the tone of their communications has, so it seems fitting that I close out with a similar observation.

BitFi has a CTO who is clearly invested in finding and addressing issues. Bugs and vulnerabilities happen, but can generally be fixed (though sometimes this might mean replacement hardware - noone ever said it has to be easy).

What cannot easily be fixed, however, is the damage to a potential purchasers trust when they see your company's official SM account screeching abuse at someone who's reported a security vulnerability to you. What potential customer isn't going to ask themselves how they might be handled if they contact you to report their device DOA, or some other issue? The distrust that that instills can rub off onto your partners as well.

Meanwhile, your competition is responding to criticism calmly and professionally.

I know which of the two would get my money, and I suspect most buyers would probably feel the same.

Updates

Bitfi have released an acknowledgement of the issues.

Equally importantly, though, they've also now created and published a vulnerability disclosure policy, including contact details for the reporting of issues (for avoidance of doubt, that policy was not available/present prior to publishing of this post).

Earlier in this article, I made reference to their dev team's investment in finding and addressing issues - the quick turnaround in getting an acknowledgement published and identifying and filling a policy gap is a product of that.